A self-driving car is traveling down the freeway when a ball suddenly bounces onto the road, followed by a young child. The vehicle must brake immediately, and it does. There was no time for it to transmit video of the scene to a server hundreds of miles away and then wait for instructions. So how did the vehicle think that quickly?

This need for immediate reaction is the central issue for all smart robots. Sending data across the Internet and waiting for a response from a remote source takes time, a problem known as robotics latency. For a delivery drone trying to avoid a bird, or a robotic surgical instrument trying to make a precise incision, that delay can mean the difference between success and disaster.

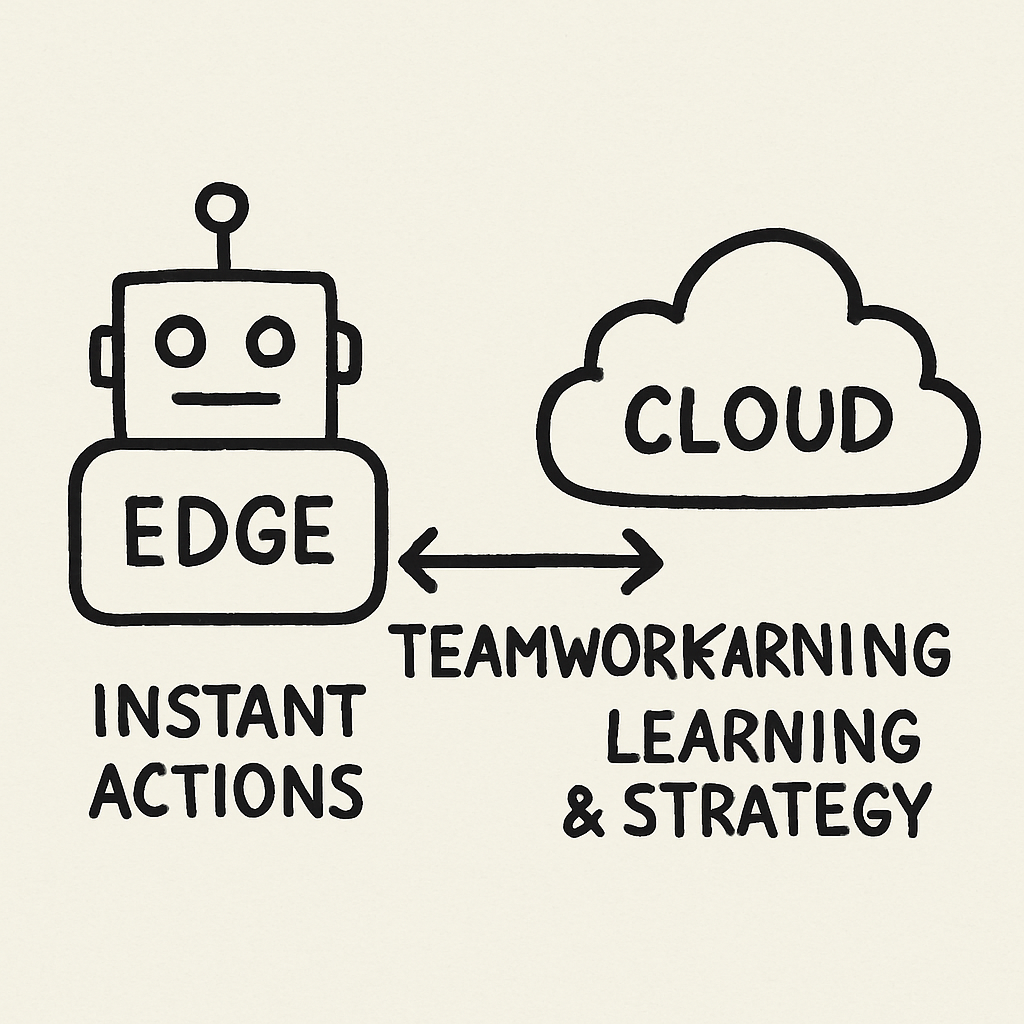

To enable real-time decision-making, a distributed model of robotic intelligence is employed. Instead of the robot’s “brain” being centralized in a single location, intelligence is distributed across multiple locations. A small portion of the robot’s intelligence — designed specifically for rapid decision-making and quick reflexes — resides with the robot (or device), while a large portion of the robot’s intelligence — designed for longer-term, more complex thinking and learning — resides in “the cloud”.

This powerful partnership is the fundamental principle on which hybrid cloud robotics relies and will enable the development of self-driving vehicles, highly efficient automated warehouses, and a new generation of intelligent technologies.

Hybrid Cloud Edge Robotics: Cloud Intelligence Meets Real-Time Robotic Action

Hybrid Cloud and Edge computing are revolutionizing how robots perceive their environment, navigate their surroundings, and improve their knowledge through learning. Using Hybrid Cloud Edge Robotics, you can provide Cloud-Scale intelligence to your robots and real-time control, enabling them to react quickly while retaining the power of large models, big data, and fleet-wide learning.

Simply stated, Hybrid Cloud Edge Robotics enables a robot’s “reflexes” to be located near the machine while its “brain growth” occurs across shared cloud resources. This combination is becoming increasingly important as robots transition from labs to warehouses, hospitals, streets, and factories, where milliseconds can make a difference.

The edge side of Hybrid Cloud Edge Robotics handles time-critical tasks: sensor fusion, obstacle avoidance, motion control loops, safety stops, and local perception updates. The cloud side handles tasks that benefit from scale: model training, simulation, long-horizon planning, analytics, and improvements to policies across the fleet. Hybrid Cloud Edge Robotics also helps manage cost, because you can keep heavy training bursts in elastic cloud infrastructure and run day-to-day inference and control on smaller edge hardware. If implemented correctly, the system remains responsive even when connectivity is lost.

Typically, an Architecture for Hybrid Cloud Edge Robotics consists of three layers (Robot, Edge Node, and Cloud). Each layer has its own functions: The Robot contains Cameras, Lidars, Encoders, and a processing module for immediate control over the robot’s movements.

Typically, the Edge Node is located in a facility rack or as part of a 5G / MEC site. It aggregates data from multiple robots, caches models, and provides low-latency services, including mapping, coordination, and video analytics. On the other hand, the Cloud provides centralized identity and device management, data lakes, pipelines, and more. Hybrid Cloud Edge Robotics becomes even stronger when each layer can communicate using consistent APIs, logging, and model versioning.

However, the true strength of Hybrid Cloud Edge Robotics lies in the data flow. Because sensor data volumes are large, it is both expensive and time-consuming to transmit all data to the cloud. Thus, edge pipelines filter the stream and forward only the relevant information in compressed form, including events, embeddings, anomalies, and short clips around incidents. This means that Hybrid Cloud Edge Robotics enables you to keep sensitive data local while still generating valuable training datasets via de-identification, aggregation, or federated learning. Over time, the cloud produces better perception and decision-making models, and the edge deploys them safely through staged rollouts, canary robots, and instant rollback.

Real-time action requires a high level of reliability. The Hybrid Cloud Edge Robotics solution degrades gracefully, maintaining robot operation when network performance is suboptimal. When a reliable network connection is available, robots may offload computationally intensive tasks to remote servers, receive updated routing information, and maintain continuous synchronization with their map data. As network performance degrades, the robots will operate autonomously without creating unsafe conditions.

To achieve this, Hybrid Cloud Edge Robotics typically uses local caching (with the most current model/policy), heartbeat monitoring to ensure communication, and “safe mode” functions that limit speed or cause the robot to revert to safe behavior. The result is maintaining productivity while enabling improvements in the robot’s capabilities upon network restoration.
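The heartbeat and safe-mode idea described above can be sketched in a few lines. This is a minimal illustration, not a production safety system: the class name `HeartbeatWatchdog`, the two-second timeout, and both speed limits are hypothetical values chosen for the example.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class HeartbeatWatchdog:
    """Tracks the last heartbeat from the network and picks a speed limit."""
    timeout_s: float = 2.0          # assumed: longer silence means the link is degraded
    normal_speed_m_s: float = 1.5   # assumed full-speed limit
    safe_speed_m_s: float = 0.3     # assumed reduced limit while in safe mode
    last_heartbeat: float = field(default_factory=time.monotonic)

    def record_heartbeat(self, now: Optional[float] = None) -> None:
        # Call this whenever a heartbeat message arrives.
        self.last_heartbeat = time.monotonic() if now is None else now

    def in_safe_mode(self, now: Optional[float] = None) -> bool:
        # Safe mode applies once the heartbeat has been silent for too long.
        now = time.monotonic() if now is None else now
        return (now - self.last_heartbeat) > self.timeout_s

    def speed_limit(self, now: Optional[float] = None) -> float:
        return self.safe_speed_m_s if self.in_safe_mode(now) else self.normal_speed_m_s


# Usage with explicit timestamps so the behavior is easy to follow.
watchdog = HeartbeatWatchdog(last_heartbeat=100.0)
print(watchdog.speed_limit(now=101.0))  # 1.5: heartbeat is recent
print(watchdog.speed_limit(now=105.0))  # 0.3: heartbeat missed, safe mode
```

The robot keeps moving in both cases; only the permitted speed changes, which is the "degrade gracefully" behavior rather than a hard stop.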

Hybrid Cloud Edge Robotics is being applied to many use cases today. Warehouse applications use large fleets of mobile robots to manage traffic flow and optimize route selection through local decision-making, while the cloud is used to determine the optimal overall fleet configuration and to optimize warehouse throughput by learning from each shift. Manufacturing applications provide edge-based robotic arm precision control, while the cloud is used to analyze quality trend data and update machine-vision models across various manufacturing facilities.

Healthcare applications require service robots to make immediate navigation decisions while ensuring strict privacy protections for patient-adjacent sensor data. Hybrid Cloud Edge Robotics provides these capabilities by keeping all patient-adjacent sensing local while delivering cloud-based analysis for predictive maintenance and planning.

The security and governance aspects of Hybrid Cloud Edge Robotics must be implemented at the outset, not after development is complete. Because robotics is a cyber-physical system (CPS), any security compromise or issue within this CPS could result in physical harm. To protect against these scenarios, hybrid cloud edge robotics requires strong device identities, encryption, signed model artifacts, and role-based access controls as minimum requirements.
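As a rough sketch of what "signed model artifacts" means in practice, the edge device can refuse to load any model whose signature does not verify. The example below uses a shared-secret HMAC from the Python standard library purely to stay self-contained; that choice is an assumption of this sketch, and real deployments commonly use asymmetric signatures so the device holds no signing key. The function names are hypothetical.

```python
import hashlib
import hmac


def sign_artifact(artifact: bytes, key: bytes) -> str:
    """Produce a hex signature for a model artifact (done on the publishing side)."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(artifact: bytes, signature_hex: str, key: bytes) -> bool:
    """Check the signature using a constant-time comparison."""
    expected = sign_artifact(artifact, key)
    return hmac.compare_digest(expected, signature_hex)


def load_model_if_trusted(artifact: bytes, signature_hex: str, key: bytes) -> bytes:
    """Return the artifact only if its signature verifies; otherwise refuse."""
    if not verify_artifact(artifact, signature_hex, key):
        raise ValueError("model artifact failed signature check; refusing to load")
    return artifact


# Usage: a tampered artifact is rejected before it can reach the robot.
key = b"demo-key-for-illustration-only"
model = b"model-weights-v2"
signature = sign_artifact(model, key)

print(verify_artifact(model, signature, key))                # True
print(verify_artifact(model + b"tampered", signature, key))  # False
```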

Additionally, Hybrid Cloud Edge Robotics must include an audit trail to document who deployed a particular model, when parameters were updated, and the rationale for a robot’s decision. There are additional policy considerations, such as defining which data may leave a site, how long data is retained, and how updates to a particular model are approved, particularly for organizations operating in regulated environments.

Hybrid Cloud Edge Robotics operations and monitoring represent the transition from a prototype to a production environment. For teams to successfully transition to production, they must have visibility into latency, battery health, motor temperature, sensor drift, and model performance. As a platform, Hybrid Cloud Edge Robotics offers a range of features, including fleet dashboards, over-the-air updates, remote debugging, and automatic incident capture and failure analysis to drive learning.

By maintaining proper observability of your environment, you can identify gradual camera degradation; detect lighting changes that affect a robot’s perception; and deploy targeted fixes to models quickly without stopping the entire fleet.
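One simple way to catch gradual degradation is to compare a rolling average of a health metric against a known-good baseline. The sketch below assumes the metric is something like average detection confidence; the class name `DriftMonitor`, the window size, and the allowed drop are illustrative assumptions, not recommended values.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Flags drift when the rolling mean falls too far below a baseline."""

    def __init__(self, baseline: float, window: int = 50, max_drop: float = 0.10):
        self.baseline = baseline
        self.max_drop = max_drop
        self._recent = deque(maxlen=window)  # keeps only the newest `window` values

    def add(self, value: float) -> bool:
        """Record a value; return True if drift is detected."""
        self._recent.append(value)
        if len(self._recent) < self._recent.maxlen:
            return False  # not enough samples yet to judge
        return (self.baseline - mean(self._recent)) > self.max_drop


# Usage: confidence slowly falls as a (hypothetical) camera lens gets dirty.
monitor = DriftMonitor(baseline=0.90, window=3, max_drop=0.10)
for confidence in [0.90, 0.90, 0.88, 0.70, 0.70]:
    if monitor.add(confidence):
        print(f"drift detected at confidence {confidence}")
```

A single bad frame does not trip the monitor; only a sustained drop across the window does, which suits slow problems such as lens dirt or changing light.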

Looking ahead, Hybrid Cloud Edge Robotics will continue to combine classical control methods with edge AI and cloud-scale training. Larger foundation models will provide greater generalization capabilities for robots; however, due to the tight constraints of edge computing environments, efficient inference, quantization, and specialized accelerators will remain required.

Synthetic data pipelines, improved simulators, and digital twins that enable systems to learn in a safe environment before deployment in the physical world will also add value to Hybrid Cloud Edge Robotics. Ultimately, the central promise of Hybrid Cloud Edge Robotics remains the same: cloud-based intelligence for continuous improvement and edge-based execution for immediate, trustworthy action.

From Reflex to Reason: How Modern Robotics Thinks

The quick-thinking response of a self-driving vehicle may have looked like magic, but you now know it is simply a partnership. Just as our own bodies combine quick reflexes against imminent threats (our nervous system) with the ability to think deeply and plan for the future (our cognitive abilities), smart machines need to respond quickly to immediate threats and strategically to problems they will face later. You can now confidently explain that hybrid cloud robotics is built on this teamwork between the edge and the cloud.

Your next task is not to build a robot, but to begin viewing robots through this lens. Next time you see a delivery drone, a smart vacuum cleaner, or read about an automated checkout, attempt to identify the invisible “dance” that takes place. Ask yourself: What portion of the process is a quick reflex and what portion is a thoughtful process? Identifying the benefits of edge computing in action is a simple way to make this knowledge your own.

You now possess the ability to look at the constantly changing landscape of technology and look past the machine to understand the invisible systems that drive its intelligence. In short, you do not merely see the technology; you understand how it works.

The Problem of Delay: Why Robots Can’t Just Use the Internet for Everything

If the cloud brain is so good, why would you ever use anything else as the brain of your robot? One reason is the distance between the cloud and the robot. Even though the signal travels at close to the speed of light, when you send a command to a server on another continent, or in a city many miles away, there is a delay (called latency) before the response comes back. If a self-driving vehicle sees a bicycle swerving into its path, a few milliseconds may mean the difference between successfully avoiding the bicycle and crashing into it.
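To put the delay in concrete terms, here is a back-of-the-envelope sketch of how far a vehicle travels while it waits for an answer. The speed and the two delay figures are illustrative assumptions, not measurements from any real vehicle or network.

```python
def distance_during_delay(speed_m_per_s: float, delay_ms: float) -> float:
    """Distance in metres travelled while waiting `delay_ms` for a response."""
    return speed_m_per_s * (delay_ms / 1000.0)


# Assumed for illustration: a car at about 30 m/s (roughly 108 km/h).
FREEWAY_SPEED_M_PER_S = 30.0

for label, delay_ms in [("on-board (edge)", 10.0), ("remote cloud round trip", 150.0)]:
    metres = distance_during_delay(FREEWAY_SPEED_M_PER_S, delay_ms)
    print(f"{label}: {delay_ms:.0f} ms -> {metres:.2f} m travelled before reacting")
```

Under these assumptions the car covers about 0.3 m before an on-board decision and about 4.5 m before a remote one, which is the gap the rest of this article is about.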

You’ve probably felt latency as the “lag” during video calls when a friend freezes mid-sentence. A frozen video call is annoying, whereas latency in a robot can result in a total failure. For example, if a delivery drone needs to avoid pedestrians on a busy street, a delayed response could cause it to hit a person rather than safely avoid them.

It is clear that relying on the cloud as the sole source of intelligence will never be sufficient. The cloud is well-suited for large-scale learning and data analysis; however, cloud latency makes it difficult to meet the response-time requirements of real-world environments. Therefore, a new model was developed by equipping the robot with a high-speed nervous system (i.e., reflexes).

The Solution for Speed: Giving Robots ‘Reflexes’ with Edge Computing

Engineers discovered a solution to this delay problem. They knew the robot couldn’t wait for approval from a remote server. The solution was to use edge computing to enable the robot to “think” independently immediately.

Edge Computing is similar to the reflexes of your body. When you put your hand on a hot stove, your hand will pull away fast, even before you realize you are experiencing pain. In the same way, Edge Computing enables a small but powerful mini-brain to operate on the robot at the “edge” of the network to make instant decisions.

You may already be familiar with this technology. Smart vacuums don’t send videos to the internet and then ask whether they should go around a chair leg; they have built-in sensors that cause the vacuum to turn around in a fraction of a second. By operating on local data, the robot’s performance improves significantly. This turns a clumsy robot into a quick, agile assistant that can navigate complex environments with minimal guidance.

The mini-brain on the robot is well-suited for immediate responses, but it has limitations. This type of computer is used for speed rather than for storing large amounts of data for long periods or for heavy computing. For these types of tasks, our robot still needs to connect to the ‘big brain’ in the sky.

Robotics Edge Computing: Low-Latency Computing for Intelligent Robots

Robotics Edge Computing refers to the practice of placing high-priority robot workload(s) near the robot — on the robot, on an on-premise server, or on a local Edge Server. The primary objective of robotics Edge Computing is to reduce latency in processing robot sensory inputs and to improve the reliability of remotely operated robots. By doing so, it is possible to maintain a responsive robot that can react to people, objects, and other stimuli within milliseconds, thereby improving its ability to safely navigate and accurately manipulate objects in its environment.

One of the key benefits of Robotics Edge Computing is the ability to enable real-time perception and control. A continuous stream of data from cameras, Lidar, force sensors, and encoders must be processed as soon as it arrives. Robotics Edge Computing enables rapid sensor fusion, object detection, localization, path planning, and control-loop execution without round-trip communication to remote data centers. The lower latency of this local pipeline will improve robot safety (e.g., faster stop/avoidance behavior) and performance (e.g., smoother motion, reduced errors, increased productivity), while also improving overall efficiency and reducing operational costs.

Robotics Edge Computing can also reduce network bandwidth usage and operational costs. Instead of transmitting full-motion video or complete point clouds to the cloud, the edge transmits detected events, anomalies, summaries, and short clips. This allows fleets to scale more easily and helps meet privacy and regulatory requirements, because sensitive data remains on premises.
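A minimal sketch of this filtering step is shown below. The `Reading` and `Event` types, the `near_obstacle` event name, and the one-metre threshold are all hypothetical; the point is only that the bulky raw frame never leaves the edge.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Reading:
    timestamp_s: float
    obstacle_distance_m: float
    raw_frame: bytes  # stand-in for a camera frame or point cloud


@dataclass
class Event:
    timestamp_s: float
    kind: str
    obstacle_distance_m: float


def filter_at_edge(readings: Iterable[Reading],
                   near_threshold_m: float = 1.0) -> Iterator[Event]:
    """Yield a compact event only for readings worth reporting upstream."""
    for reading in readings:
        if reading.obstacle_distance_m < near_threshold_m:
            # The raw frame is deliberately not copied into the event.
            yield Event(reading.timestamp_s, "near_obstacle",
                        reading.obstacle_distance_m)


# Usage: five readings with 1000-byte frames, only two of them noteworthy.
distances = [5.0, 3.2, 0.8, 4.1, 0.5]
readings = [Reading(float(i), d, bytes(1000)) for i, d in enumerate(distances)]
events = list(filter_at_edge(readings))
raw_bytes = sum(len(r.raw_frame) for r in readings)
print(f"{len(events)} events sent instead of {raw_bytes} bytes of raw frames")
```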

In addition, Robotics Edge Computing will support “Offline-First” operations for mission-critical robotics applications, allowing robots to continue performing their tasks even when disconnected from the cloud, and, once connectivity is restored, to synchronize log and metric data.
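The "offline-first" pattern can be sketched as a local buffer that is drained whenever the link comes back. The class name `OfflineFirstLog` and the `send` callback are hypothetical; a real system would likely persist the buffer to disk rather than hold it in memory.

```python
from collections import deque
from typing import Callable, Deque, Dict


class OfflineFirstLog:
    """Buffers log entries locally and uploads them when the link allows."""

    def __init__(self, send: Callable[[Dict], bool], max_buffered: int = 1000):
        self._send = send  # returns True if the entry was delivered
        # When the buffer is full, the oldest entries are dropped first.
        self._pending: Deque[Dict] = deque(maxlen=max_buffered)

    def record(self, entry: Dict) -> None:
        # Recording never blocks on the network.
        self._pending.append(entry)

    def sync(self) -> int:
        """Try to upload pending entries in order; return how many were sent."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # link is down; keep the entry for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._pending)


# Usage with a simulated link that starts down and then recovers.
link = {"up": False}
received = []

def fake_uplink(entry: Dict) -> bool:
    if link["up"]:
        received.append(entry)
    return link["up"]

log = OfflineFirstLog(fake_uplink)
log.record({"battery": 0.82})
log.record({"battery": 0.81})
print(log.sync(), log.pending)  # nothing sent while offline
link["up"] = True
print(log.sync(), log.pending)  # backlog delivered after reconnect
```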

In most robotics use cases, Robotics Edge Computing can be used alongside Hybrid Cloud Edge Robotics. Edge resources handle time-sensitive tasks, while the cloud handles larger-scale workloads such as large-scale model training, simulation, analytics, and fleet-wide software updates. Using Hybrid Cloud Edge Robotics enables teams to continually update and deploy models across multiple robots while maintaining real-time responsiveness. In addition, Hybrid Cloud Edge Robotics enables centralized governance (Identity, Access, Signed Artifacts, Audit Trails) while maintaining each robot’s autonomy at the edge.

By combining Hybrid Cloud Edge Robotics with Robotics Edge Computing, teams can achieve an effective balance between fast local decision-making and scalable cloud-based intelligence. This results in robots that can react locally in real time, continually learn and adapt, and remain easily manageable as the number of deployed robots increases—exactly what today’s autonomous robotic systems require.

Edge Computing Robotics: Real-Time Intelligence Where Robots Operate

Edge Computing Robotics enables robots to process information locally (on the robot, at a nearby gateway, or on an on-site edge computer) to support near-instant decision-making and response times. In other words, with Edge Computing Robotics, perception, planning, and safety behaviors do not have to wait for a remote data center before acting. This is particularly important when a robot must recognize that a person is stepping into its path, adjust the pressure it applies to a delicate product, or navigate a narrow aisle quickly.

Edge Computing Robotics will support workloads such as sensor fusion, object detection, SLAM/localization, collision avoidance, and control-loop execution. All these workloads are time-critical and data-intensive (especially video and LiDAR), so Edge Computing Robotics enables these applications to run locally, reducing jitter and providing smoother, more predictable motion. Edge Computing Robotics also improves uptime: regardless of Wi-Fi drops or low-bandwidth conditions, the robot can operate autonomously using local processing resources.

Edge Computing Robotics enables smart data processing. The processing of raw sensor data does not need to occur in the cloud; the edge can process, filter, reduce data volume, and compress it into events, anomalies, clips, or feature embeddings. This reduces cloud costs and supports privacy requirements by enabling image processing to occur locally. The edge will monitor model drift, detect unusual conditions (e.g., light changes or floor glare), and flag data that require retraining over time.

Some teams pair Edge Computing Robotics with Hybrid Cloud Edge Robotics to achieve the best of both worlds: fast execution at the edge and scalable intelligence in the cloud. In this arrangement, the cloud performs heavy training, simulation, fleet analytics, and central management, while the edge performs immediate decision-making. Hybrid Cloud Edge Robotics also enables controlled model rollouts: test updates on a small number of robots first, evaluate performance, and quickly revert to previous model versions if necessary.

When implemented at a large scale with many robots, Edge Computing Robotics creates a coordination advantage. Each local edge node can coordinate traffic, share maps, and distribute workload among robots operating within the same facility. Additionally, Hybrid Cloud Edge Robotics unifies logging, policy enforcement, and security across all locations. If implemented correctly, Hybrid Cloud Edge Robotics, paired with Edge Computing Robotics, will enable robots to respond and improve continuously—real-time intelligence at the point of operation and continuous learning across all robots.

Edge AI Robotics: AI Decisions in Real Time

Edge AI Robotics refers to running AI models on the robot’s own onboard hardware, close to its sensors and actuators, enabling the robot to make decisions within milliseconds rather than seconds. This enables robots to detect people, identify objects, determine distance, and adjust their motion based on sensor input, all as part of safe navigation, smooth manipulation, and effective collaboration with humans. Edge AI Robotics also greatly reduces the need to stream raw video or LiDAR data to the cloud, lowering bandwidth costs and keeping sensitive information on-site.

Edge AI Robotics is commonly used in real-world applications to support perception (vision & depth), localization & mapping, anomaly detection, and on-device quality checks. Since the AI runs locally on the device, an application using Edge AI Robotics will continue to function as intended even if the Wi-Fi connection is unstable or internet connectivity is limited due to site restrictions. The ability to “continue to operate safely” is one of the primary reasons factories, warehouses, and hospitals are adopting Edge AI Robotics for daily automation.

However, robots must also keep evolving and improving, and this is where Hybrid Cloud Edge Robotics becomes relevant. A Hybrid Cloud Edge Robotics deployment provides the speed of edge-based inference and control, along with the scale and capabilities of the cloud for training large models, running simulations, and managing fleets.

In a Hybrid Cloud Edge Robotics deployment, the edge executes latency-critical inference and control tasks, while the cloud trains larger models, runs simulations, and analyzes performance across multiple robots and sites. Hybrid Cloud Edge Robotics enables efficient software updates, model drift monitoring, and cross-environment result comparisons without disrupting real-time operations.

A useful implementation model is for Edge AI Robotics to process data locally and send only compact, filtered signals (events, embeddings, failures, and short clips) to the cloud. Once the data reaches the cloud side of the Hybrid Cloud Edge Robotics environment, it can be used to retrain models, evaluate improvements, and deliver signed model packages to edge devices for deployment. Through a carefully planned rollout, updates are first tested on a small set of robots while safety-related metrics are monitored; if a regression appears, the fleet reverts to the prior version immediately.
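The staged rollout described here can be sketched as a small function. This is a simplified illustration under stated assumptions: `staged_rollout` and the `is_healthy` callback are hypothetical names, and a real rollout would watch metrics over time rather than make a single check.

```python
from typing import Callable, Dict, List


def staged_rollout(robots: List[str],
                   new_version: str,
                   old_version: str,
                   canary_count: int,
                   is_healthy: Callable[[str, str], bool]) -> Dict[str, str]:
    """Return the model version each robot should run after the rollout."""
    if canary_count < 1:
        raise ValueError("at least one canary robot is required")

    canaries = robots[:canary_count]
    rest = robots[canary_count:]

    # Stage 1: only the canary robots receive the new version.
    assignment = {robot: old_version for robot in robots}
    for robot in canaries:
        assignment[robot] = new_version

    # Stage 2: if any canary regresses, roll everything back.
    if not all(is_healthy(robot, new_version) for robot in canaries):
        return {robot: old_version for robot in robots}

    # Stage 3: canaries look fine, so promote the rest of the fleet.
    for robot in rest:
        assignment[robot] = new_version
    return assignment


# Usage: one canary, and a health check that reports a regression.
fleet = ["robot-1", "robot-2", "robot-3", "robot-4"]
result = staged_rollout(fleet, "v2", "v1", canary_count=1,
                        is_healthy=lambda robot, version: False)
print(result)  # every robot stays on (or returns to) v1
```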

Security and safety are key factors in both systems. The advantages of Edge AI Robotics include local policy enforcement and quick fallback behaviors, whereas Hybrid Cloud Edge Robotics provides centralized identity management, audit logs, and controlled access to models and telemetry. In summary, when combined, Edge AI Robotics and Hybrid Cloud Edge Robotics deliver a balanced robotic system that includes immediate autonomy at the edge, continuous learning in the cloud, and consistent operation of a growing fleet of robots. This is how the current generation of robotics transforms AI into real-time, trustworthy actions.

The ‘Big Brain’ in the Sky: What the Cloud Is Really For

Most people view the cloud as a digital filing cabinet for their photos and documents. But to a robot, the cloud is much closer to a highly advanced brain for learning. Once your reflexes have pulled your hand back from a hot stove (the edge), your actual brain (the cloud) will process what occurred. This processing results in learning and memory of the experience, so that the next time you are more cautious.

In the same way, the cloud handles the heavy lifting of decision-making and long-term memory for a robot. This is where the true magic occurs. The cloud is not simply analyzing data from a single robot; it can also aggregate experiences from multiple robots in a fleet. When a single delivery drone has difficulty navigating a new construction site, the system receives the data, analyzes it, and can transmit a software update to all other cloud-connected robots to immediately teach them the safest route. Therefore, one robot’s lessons become every robot’s knowledge.

The edge computer is responsible for the quick “don’t hit that wall” type decisions, while the cloud is responsible for the “how can we make all of the robots smarter tomorrow” type decisions. Due to limited processing capacity, a robot’s local brain cannot perform such large-scale analysis on its own. The cloud provides the incredible computational capabilities needed for the robot to improve over time. As such, the robot is not required to choose between relying on its own reflexes and a distant brain; rather, it is designed to use both in a powerful partnership.

Hybrid Cloud Solutions: Flexible Cloud Power, Smarter Control

Hybrid Cloud Solutions integrate the strengths of On-Premises (Infrastructure) and Public Cloud. They offer the elasticity applications need as demand increases, control in areas such as security and regulatory compliance when those demands arise, and a less burdensome migration path for modernizing legacy applications.

Hybrid Cloud Solutions allow each application to reside in the environment most suitable for its needs. Sensitive or latency-sensitive workloads stay closest to users and operations, while the cloud supplies burst capacity, advanced analytics, and rapid prototyping. This reduces lock-in and avoids the “all or nothing” migrations that can disrupt the business.

One of the key practical advantages of Hybrid Cloud Solutions is the ability to achieve better operational control. Centralized policy management can govern Identity, Access, Encryption, and Compliance across all environments while retaining local systems to maintain performance and continuity. Additionally, Hybrid Cloud Solutions enable the creation of Resilient Architectures: in the event of limited connectivity, critical services can operate locally while synchronizing data and logs to the cloud once connectivity is restored. Many organizations find that this hybrid model aligns better with the budget and operational constraints than either a purely cloud or a purely on-premises model.

In robotics and automation, Hybrid Cloud Edge Robotics clearly demonstrates why hybrid models matter. The robot requires immediate response to perception, safety stop, and motion control commands – work that must remain at the edge. At the same time, Hybrid Cloud Edge Robotics leverages cloud-scale computing to train, simulate, and analyze fleets, thereby improving models over time. Hybrid Cloud Solutions enable users to keep real-time inference near the robot while leveraging the cloud to retrain vision models, use a digital twin, and coordinate updates across multiple locations. This balance of low latency and continuous learning is the essence of Hybrid Cloud Edge Robotics.

Hybrid Cloud Solutions also enhance the safety of rollouts. In a Hybrid Cloud Edge Robotics deployment, updates can be staged, tested on a subset of devices, monitored for regression, and rapidly rolled back. Data can be filtered at the edge to preserve privacy and minimize bandwidth while the cloud aggregates insights to improve overall system performance – another area of strength of Hybrid Cloud Edge Robotics.

The main advantages of Hybrid Cloud Solutions are scalability, flexibility, and centralized, intelligent control. These matter most for systems that require both rapid responsiveness and large-scale intelligence, such as Hybrid Cloud Edge Robotics.

A combination of centralized cloud functionality and local edge computing enables systems to respond quickly without sacrificing the economies of scale associated with the cloud. In this way, time-sensitive, real-time processing is performed at the edge (e.g., robots, cameras, sensors, and machines), while larger workloads (e.g., analytics, model training, and long-term storage) remain in the cloud. By doing so, you reduce latency and improve dependability, and your overall performance remains stable even when the connection to the cloud is poor.

One significant advantage of Cloud Edge Solutions is real-time decision-making. You no longer have to send all raw video, telemetry, and LiDAR streams to a remote data center and wait for results to come back; instead, the edge node can run inference, filter data, and trigger actions based on local events and conditions. Cloud Edge Solutions can also significantly lower bandwidth costs, since you transmit only what is necessary (e.g., events, anomalies, and summaries) or compressed versions of the original data.

For companies concerned about data confidentiality, Cloud Edge Solutions let you keep your data on-site while still providing centralized governance, dashboards, and insights into your entire fleet.

In robotics, Hybrid Cloud Edge Robotics is a strong example of how to implement this concept in practice. Because robots require millisecond response times for safety stops, navigation, and motion control, they are well-suited to the edge execution paradigm.

However, Hybrid Cloud Edge Robotics also relies on cloud-scale learning: training better perception models, running simulations, and analyzing fleet behavior across multiple locations. Cloud Edge Solutions provide the glue that makes it easier to deploy consistent services across multiple locations and to push updates into the system without disrupting real-time operations. Therefore, Hybrid Cloud Edge Robotics typically employs edge nodes for coordination and caching, and the cloud for orchestration and continuous improvement.

Operationally, Cloud Edge Solutions enable safer deployments and greater fault tolerance. In a Hybrid Cloud Edge Robotics environment, you can stage model updates, monitor key performance indicators, and roll back quickly if performance decreases. If the network fails, the edge can continue to run critical functions until it can sync again with the cloud, a highly desirable feature for Hybrid Cloud Edge Robotics in high-traffic areas.

To successfully implement Cloud Edge Solutions, standardize observability across the edge and cloud, implement secure device identities and signed artifacts, and establish explicit policies regarding what runs where. When done correctly, Cloud Edge Solutions provide cloud-scale capability at edge speed, which is exactly what Hybrid Cloud Edge Robotics requires to remain responsive, secure, and continually improving.
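An explicit "what runs where" policy can be as simple as a function that every team consults. The sketch below is one possible rule set, not a standard: the `Workload` fields, the assumed 100 ms cloud round trip, and the example deadlines are all illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    deadline_ms: float                      # how quickly a result is needed
    handles_sensitive_raw_data: bool = False


def place(workload: Workload, cloud_round_trip_ms: float = 100.0) -> str:
    """Decide whether a workload runs at the edge or in the cloud."""
    if workload.handles_sensitive_raw_data:
        return "edge"   # keep sensitive raw data on-site
    if workload.deadline_ms < cloud_round_trip_ms:
        return "edge"   # the cloud cannot answer in time
    return "cloud"      # everything else can use cloud scale


# Usage with a few hypothetical workloads.
for workload in [
    Workload("safety_stop", deadline_ms=10),
    Workload("model_training", deadline_ms=3_600_000),
    Workload("patient_area_video", deadline_ms=60_000, handles_sensitive_raw_data=True),
]:
    print(f"{workload.name}: {place(workload)}")
```

Writing the rule down as code makes the policy reviewable and testable, instead of leaving placement decisions implicit in each deployment.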

The Dream Team: How Hybrid Cloud and Edge Work Together

We have a robot with two “brains”. There is a fast, local brain (edge) for the robot’s quick reflexes and a powerful, remote brain (cloud) for deeper thinking. This is the core concept of Hybrid Cloud Edge Robotics – a dream team that gets the best of both brains. It is possible for the robot to become faster and smarter simultaneously.

The easiest way to see how the edge and the cloud work together is to look at your own body. Your reflexes are your edge computing. If you trip on a curb, your arms will flail out to protect you from harm before you’ve even consciously thought about what you did. That is your local nervous system reacting quickly in order to save you. Once you’re stabilized, your brain (your cloud) has a chance to analyze what occurred and remember it so you won’t trip on that street again.

This powerful partnership allows for a continuous learning cycle. Your robot’s edge computer makes hundreds of instantaneous decisions; however, when something unusual occurs, like a new obstacle, the edge computer recognizes it and transmits that information to the cloud for analysis. The cloud brain analyzes the information, determines the best way to handle the new obstacle, and transmits the decision back to the robot. This hybrid cloud architecture enables the robot to continuously improve itself.

Ultimately, this hybrid architecture enables robots to go beyond being simply pre-programmed machines. These robots now operate as dynamic systems that can respond immediately to their environment and become increasingly intelligent over time. Each robot can learn from its experiences and apply that knowledge to improve the safety and efficiency of other robots in the fleet.

Three Places You’ll See Hybrid Robotics in Action Today

The Edge-Cloud partnership combines the speed of an intelligent edge (or local) computing environment to process real-time data with the vast resources of a cloud-computing environment. Together, they can create new experiences for people today, such as cashierless stores, autonomous delivery drones, and automated farming.

There are several ways that this partnership is currently being used to help people, and here are some examples:

- Stores such as Amazon Go use a combination of cameras and other sensors to track customers as they shop. When you select an item to purchase, the cameras and sensors at the edge (i.e., in the store) rapidly transmit data to the cloud for processing. The cloud can then charge your account, update the inventory levels of the selected items, and analyze shopping patterns across all customers who have purchased at the store.

- Agricultural robots are also being used to improve crop yields by identifying and destroying weeds. Each agricultural robot has an onboard camera and runs machine-learning algorithms at the edge, on the robot itself, to quickly decide whether something is a weed. If it identifies a weed, the robot sprays it. After completing each pass through the field, the robot sends data to the cloud indicating the number of weeds destroyed per acre, and the cloud uses this information to develop a strategy for the next day that maximizes crop yield.

- Delivery drones are also an example of how edge-cloud partnerships are creating a new experience for people. While the primary function of a delivery drone is to deliver packages safely, the edge processor is primarily concerned with rapid reflexes such as avoiding birds, adjusting for changes in wind direction, and responding to other immediate environmental issues. However, the cloud orchestrates routing for the entire drone fleet, ensuring packages arrive on time and minimizing congestion in the skies.

Ultimately, separating the immediate and complex tasks of an edge-based (or local) computing environment from those of a cloud-based computing environment enables both the edge and the cloud to operate efficiently and securely.

What Happens When the Connection Gets Faster? The Role of 5G

The edge handles a robot’s immediate reflexes, but it still needs to ‘talk’ to the cloud to learn, get updates, and coordinate with other machines. Think of this connection like your internet on a bad day—a choppy video call where you miss half the conversation. For a team of robots working together, this communication delay (latency) can slow the overall operation and limit what they can accomplish collectively.

This is where 5G comes in, acting as a massive upgrade to the highway connecting the edge and the cloud. It doesn’t replace the robot’s quick reflexes or its long-term memory; it just makes the conversation between them incredibly fast and reliable. The impact of 5G on edge robotics is that a machine can now send large amounts of data, such as high-definition video, for real-time processing and receive complex instructions in a blink.

5G dramatically shrinks that frustrating delay. What does this super-fast connection unlock? Imagine a team of search-and-rescue robots entering a collapsed building after an earthquake. With 5G, they can all stream live video feeds simultaneously to a central cloud “brain.” The cloud can quickly stitch these feeds together into a live 3D map of the disaster zone, identifying survivors and directing the robots as a coordinated team. This level of teamwork, which depends on low latency in high-stakes situations, is far harder to achieve on a slower network.