Do you remember searching your phone for “cat” and then having all your cat pictures appear? That is a form of Artificial Intelligence at work. Do you also remember your phone automatically picking out each person in a group photo and suggesting that you tag them? That, too, is a form of Artificial Intelligence, but of a very different type.

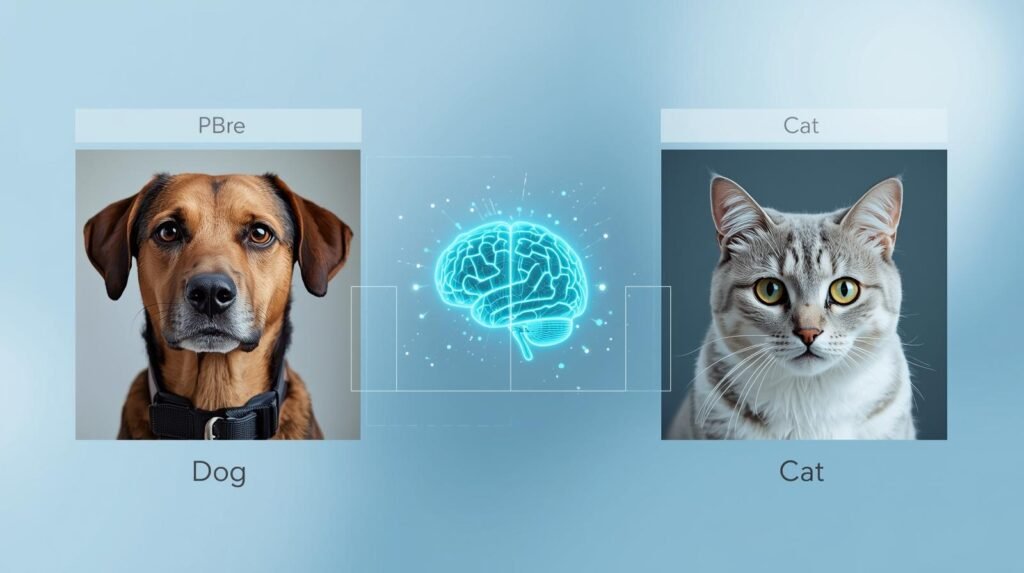

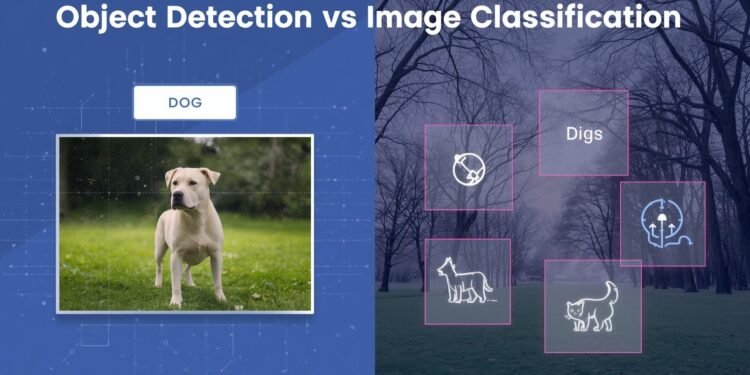

The first (finding all your cat pictures) is an example of image classification. Think of this as simply assigning one label to the entire image, such as #beach or #dog. The second (finding each face in a group photo so it can be tagged) relies on object detection, a more complex task that involves finding one or more objects within an image and locating each one with a bounding box.

The fundamental difference between image classification and object detection stems from the question each seeks to answer. Image classification answers “what is the primary subject of this picture?” In practice, this is great for organizing very large photo collections. Object detection is far more complicated, because it must answer both “what objects does this picture contain?” and “where is each of those objects located?” Object detection is therefore critical to technology that must engage with the physical world, rather than simply categorize it.

This difference highlights how computers are learning to perceive and comprehend the visual world around us. Understanding these two core methods of computer vision helps explain the technology we use every day, from autonomous vehicles to checkout systems that scan products automatically.

What is Image Classification? Think of It as Giving Your Photo One Perfect Hashtag

Have you ever searched your phone’s gallery for “sunset” and immediately seen every picture you took that includes a sunset? Then you’ve experienced the power of image classification. At its most basic, this type of artificial intelligence (AI) analyzes the entire photograph and answers one simple question: “What is this a picture of?” It does so by assigning a single label to the whole image.

To put it simply, it is like applying a single perfect hashtag to a photograph. Suppose you upload a picture of a golden retriever chasing a frisbee in a park. The system analyzes the entire scene, determines that the dominant subject is the golden retriever, and labels the whole picture “dog.” It does not report the frisbee or the trees; it produces only a high-level summary. This type of labeling is very useful for sorting large collections of photographs into simple categories such as “beach,” “food,” or “cat.”

What if an image contains multiple important items, such as both your cat and your dog? A single-label classifier still has to choose one answer: it scores every possible category and reports the one with the highest score, typically whichever animal is more prominent in the picture. More importantly, an image classifier does not know where these items are within the image, or how close together they are; it only knows that a dog (or whatever) is probably somewhere in the picture. That is exactly why a more advanced technique is required to locate objects in an image, whether for tagging friends in photos for social media or guiding a self-driving vehicle.

What is Object Detection? It’s a Digital Treasure Hunt in Your Pictures

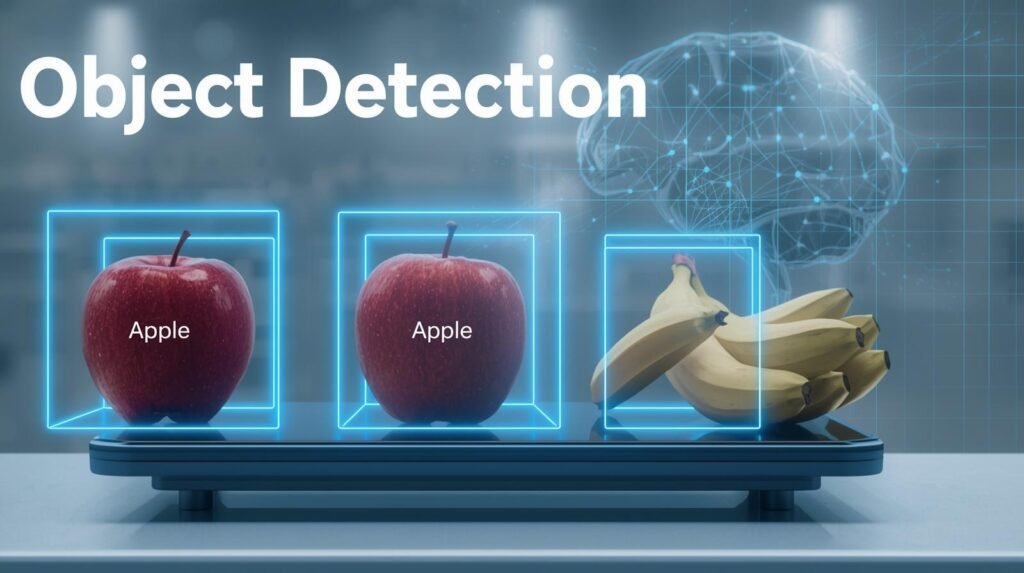

Object detection is a more powerful extension of image classification. Think of it as a digital treasure hunt. Instead of a single title for the whole image, you get a list of the individual items of interest in it. This allows the AI to identify multiple objects in an image, not just the most obvious one. The system does not have to decide whether to call the picture “cat” or “dog”; it can report both the cat and the dog.

In addition to identifying the objects in an image, the system must also report their locations. To do this, object detection draws a rectangular boundary (called a bounding box) around each identified object. If you take a photo of your pets, object detection would find both the cat and the dog, draw a bounding box around each, and label one “cat” and the other “dog.” In essence, the computer is no longer just vaguely aware that there are animals in the picture; it shows exactly where each animal is.

The ability to identify items in an image and draw a box around each one is what separates a basic photo-sorting tool from more advanced AI software, such as the kind that finds the people in a group photo and suggests tagging your friends. It shifts the computer’s interpretation of an image from “What does this picture represent?” to “What items can be found in this picture, and where are they?” Comparing the two approaches side by side, the primary difference comes down to a single label versus multiple labeled boxes.

The Main Difference: A Single Label vs. Multiple Labeled Boxes

The easiest way to understand the fundamental distinction between classification and detection is to see how the two types of systems analyze the same picture. Suppose you feed a picture of a cat and a dog playing in a yard to both systems. An image classifier will examine the entire scene and return a single label (e.g., “dog”), driven mainly by whichever object is most visible or prominent in the picture.

Object detection, on the other hand, works like a detailed inventory list. Rather than settling on a single object, it identifies every object of interest in the picture, places a bounding box around each, and assigns each box its own label. As the image illustrates, its output is therefore a group of answers rather than a single answer (e.g., “dog” at this location, “cat” at that location). Put simply, one type of system provides the story’s title, and the other identifies all the major characters.

How Do Computers Learn to See? It’s Like Teaching a Toddler

Learning to recognize images may sound complicated for an Artificial Intelligence (AI), but the process can be described in simple terms: it is similar to how a young child learns to understand the world. A child does not learn what a car looks like from a dictionary definition. Instead, a parent shows the child many different cars (large or small, red or blue) and says, “This is a car.” Over time, the child learns to connect the characteristics that define a car, and does the same for other things.

Computers are taught in a similar way: developers provide the AI system with millions of images that humans have already labeled. For image classification, the AI is shown a picture of a beach together with the label “beach.” For object detection, the training images must be labeled in more detail: a person draws a box around each pedestrian in a street scene and labels each box “person.” This preparation step is called data labeling, and it gives the AI the equivalent of millions of digital flashcards.

This extensive training enables the AI to identify patterns in images; however, it does not truly “see” them as we do. The AI does not feel the sand beneath its feet at the beach, nor does it perceive a vehicle as dangerous. It simply analyzes pixels and, based on the vast number of images it has been trained on, concludes that a certain configuration of color and shape has a very high probability (e.g., 98%) of being labeled “car” by a human. This is the core mechanism behind computer vision systems.
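To make the “very high probability” idea concrete, here is a minimal sketch of how many single-label classifiers turn raw per-class scores into probabilities with the softmax function and then report the highest-scoring class. The class names and score values are made up for illustration; a real model would compute the scores from the image’s pixels, and not every system works exactly this way.

```python
import math

def softmax(scores):
    """Convert raw per-class scores (logits) into probabilities that sum to 1."""
    # Subtracting the max score avoids overflow and does not change the result.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores, class_names):
    """Return the single most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return class_names[best], probs[best]

# Hypothetical raw scores a model might produce for one photo.
class_names = ["car", "truck", "bicycle"]
scores = [6.0, 2.0, 1.0]
label, prob = classify(scores, class_names)
print(label, round(prob, 3))  # prints: car 0.976
```

The answer is simply the class with the highest probability; nothing in this output says where in the image the car is.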

Image Classification vs. Object Detection: The Key Differences

Image classification asks: “What is in this image?” It typically provides one label (or sometimes several) for the entire image, e.g., “cat,” “dog,” or “car.” The output generally consists of the class name(s) and a confidence score for each. Classification does not indicate where the object(s) are within the image; it only indicates whether the image contains (or likely contains) them. Common applications include photo tagging, simple quality control (pass/fail), and medical screening, where a label for the overall image is sufficient.
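When an image can carry several labels at once (for example “cat” and “sofa”), a common approach is to score each class independently with a sigmoid function and keep every class whose confidence clears a threshold, rather than picking a single winner. The sketch below assumes a 0.5 threshold and invented scores; real systems tune the threshold for their application.

```python
import math

def sigmoid(x):
    """Squash a raw score into a 0-1 confidence, independently for each class."""
    return 1.0 / (1.0 + math.exp(-x))

def multi_label(scores, class_names, threshold=0.5):
    """Return every (label, confidence) pair whose confidence meets the threshold."""
    results = []
    for name, s in zip(class_names, scores):
        conf = sigmoid(s)
        if conf >= threshold:
            results.append((name, round(conf, 2)))
    return results

class_names = ["cat", "sofa", "dog"]
scores = [3.0, 1.5, -2.0]  # hypothetical raw scores for one image
print(multi_label(scores, class_names))  # prints: [('cat', 0.95), ('sofa', 0.82)]
```

Even with several labels, the output is still image-level: it says the picture contains a cat and a sofa, but not where either one is.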

Object detection asks: “What objects are in this image, and where are they?” It identifies multiple objects, encloses each one in a rectangle, and assigns a class label and confidence score to each rectangle (e.g., “person: 0.92” together with that box’s coordinates). Detection is used when the location of the objects matters, for example in autonomous vehicles (finding cars and people), retail shelf inventory (counting items), security (locating people), robotics (grasping objects), and sports analytics.
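A detector’s output can be pictured as a list of records, each holding a class label, a confidence score, and box coordinates. The sketch below uses a hypothetical `Detection` type with boxes stored as (x_min, y_min, x_max, y_max) in pixels (one common convention; others exist). It drops low-confidence boxes and counts what remains, which is roughly how a shelf-inventory or parking-lot application might use the result. The detections themselves are invented.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Detection:
    label: str         # class name, e.g. "person"
    confidence: float  # model's confidence, between 0 and 1
    box: tuple         # (x_min, y_min, x_max, y_max) in pixels (assumed convention)

def count_objects(detections, min_confidence=0.5):
    """Keep detections at or above the confidence threshold and count them per class."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    return Counter(d.label for d in kept)

# Hypothetical output of a detector on a street photo.
detections = [
    Detection("person", 0.92, (34, 50, 90, 210)),
    Detection("person", 0.81, (120, 60, 170, 200)),
    Detection("car", 0.88, (200, 120, 380, 230)),
    Detection("dog", 0.30, (95, 180, 130, 215)),  # too uncertain, so it is dropped
]
print(count_objects(detections))
```

Unlike a classifier’s single answer, every entry here carries its own location, so the application can count, compare, or track individual objects.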

Because detection adds localization on top of recognition, it is generally harder and more computationally intensive than classification. It also needs richer training data:

Classification Data: Image + Label(s)

Detection Data: Image + Label(s) + Bounding Box Coordinates for Each Object
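The difference in training data is easiest to see side by side. Below are two hypothetical annotation records for the same photo, written as Python dictionaries. The field names are illustrative and not any specific tool’s schema; the box layout [x, y, width, height] (measured from the top-left corner) follows the convention used by the COCO dataset, and the small helper converts it to corner coordinates.

```python
# Classification: one record per image, holding only the label(s).
classification_example = {
    "image": "yard_photo.jpg",
    "labels": ["dog"],
}

# Detection: one record per image, plus a box for every object.
# Boxes are [x, y, width, height] in pixels, measured from the top-left corner.
detection_example = {
    "image": "yard_photo.jpg",
    "objects": [
        {"label": "dog", "bbox": [40, 85, 210, 160]},
        {"label": "cat", "bbox": [300, 120, 110, 95]},
    ],
}

def to_corners(bbox):
    """Convert [x, y, width, height] into (x_min, y_min, x_max, y_max)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)

for obj in detection_example["objects"]:
    print(obj["label"], to_corners(obj["bbox"]))
# prints:
# dog (40, 85, 250, 245)
# cat (300, 120, 410, 215)
```

Every object in a detection record needs four extra numbers that a human annotator had to draw, which is why detection datasets cost more to build.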

The two tasks are also evaluated differently. Classification is typically measured with accuracy or F1 score, whereas detection is typically measured with IoU (Intersection over Union: how well a predicted box overlaps the ground-truth box) and mAP (mean Average Precision: how precisely and completely objects are found across all classes, where a prediction counts as correct only if its IoU with a ground-truth box exceeds a threshold).
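IoU is the area where a predicted box and a ground-truth box overlap, divided by the total area the two boxes cover together. Here is a minimal sketch, assuming boxes in (x_min, y_min, x_max, y_max) pixel coordinates. The 0.5 cut-off in the usage line is a common choice (it is the criterion used by the PASCAL VOC benchmark), not a universal rule.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if the boxes overlap at all.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so boxes that do not touch give zero intersection.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

ground_truth = (0, 0, 100, 100)
prediction = (20, 0, 120, 100)  # same size, shifted 20 pixels to the right
score = iou(ground_truth, prediction)
print(round(score, 3), "match" if score >= 0.5 else "miss")  # prints: 0.667 match
```

A perfect prediction scores 1.0 and a box that misses entirely scores 0.0; mAP then builds on these per-box match decisions.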

A task related to detection is image segmentation, which goes one step further than bounding boxes by assigning a label to every individual pixel, producing much more precise object boundaries.

When is Just a Label Enough? Real-Life Examples of Image Classification

A single tag lets you sort a huge collection quickly. For example, when you look for every beach photo you have ever taken, you don’t need to open each photo to check whether it shows a beach; your phone has already tagged those photos “beach” and can pull them all up based on that single tag.

This single-label approach is also the driving force behind many everyday applications you probably interact with. For example, image classification is very important for:

- Content Filtering: Automatically screening millions of social media images and flagging potentially inappropriate ones for a human reviewer.

- Medical Imaging: Helping medical professionals quickly sort scans into categories (normal or potential anomaly) so they can spend their time on the most concerning ones.

- Shopping Online: The technology that allows you to see only products that have been labeled as “red shoes” or “denim jackets” when using the filter function on an e-commerce website.

In all of these examples, the goal is to sort items quickly at a high level. The system does not need to locate each individual object; it only needs a quick overall judgment about the image as a whole. This makes classification ideal for large digital collections (e.g., family photographs, product catalogs, medical records).

However, this simplicity has limitations. When an autonomous vehicle needs to know the precise location of a stop sign, rather than simply whether one appears somewhere in the scene, a single label is not enough.

Why Knowing ‘Where’ Matters: Powerful Object Detection Examples in Your Life

The ‘where’ is what makes object detection a breakthrough technology. Whereas a classifier assigns a photograph to a single, broad category, object detection acts like a thorough inventory clerk, finding and tagging every relevant item in the image. Knowing the exact location of each object is what lets a system act on what it sees, especially when it is designed to interact with real-world objects.

A self-driving car is a good example. To brake properly at a stop sign, the car’s computer vision system must know where the stop sign is on the street. Object detection provides this spatial awareness by drawing a digital “bounding box” around each pedestrian, vehicle, or traffic light on the road, converting a chaotic street scene into a structured map the machine can interpret.

You can see the same technology at work in many other everyday settings. For example, when the self-checkout machine at the grocery store identifies the apple on the scale, and not the bananas right next to it, that is object detection at work. The photo app on your smartphone also uses object detection to find each face in a group picture before it tries to recognize who the individuals are.

Object detection allows computers to count objects, recognize relationships among them, and interact with the world in real time. Playing this digital game of “I Spy” at such a high level has a significant positive impact on both safety and convenience. But is it truly harder for a computer to locate an object than to simply name it?

Image Classification vs. Object Detection vs. Image Segmentation: Three Levels of Detail

Three of the most common computer vision tasks are image classification, object detection, and image segmentation. The main differences among them lie in what they predict and how detailed those predictions are.

Image Classification (coarse detail, image-level)

Question: “What is in this image?”

Output: One image-level label (or several), each with a confidence score.

Example: Labeling an image as “Cat” or “Cat + Sofa.”

Use when: You only need a yes/no or category decision and the subject’s location does not matter: photo tagging, content moderation, basic defect identification, or medical images where a single image-level diagnosis is sufficient.

Typical data: Image + label(s).

Object Detection (medium detail, object-level + location)

Question: “What objects exist in the image, and where are they?”

Output: A set of bounding boxes, each with a class label and a confidence score. Works on images that contain many objects of different types.

Example: A street scene in which every car, pedestrian, and traffic light is boxed.

Use when: You need approximate locations, counts, or tracking: autonomous driving, surveillance, retail shelf monitoring, robotic pick-and-place, sports analytics.

Typical data: Image + class labels + bounding box coordinates for each object.

Image Segmentation (fine detail, pixel-level)

Question: “Exactly which pixels in the image belong to each object or category?”

Output: A mask that labels every pixel. The two most common types are:

• Semantic segmentation: Every pixel is labeled with a class (e.g., all “road” pixels share one label).

• Instance segmentation: Separate masks for each object instance (each car).

Example: Outlining a tumor in a medical scan, or marking the area of road a vehicle can drive on.

Use when: Object boundaries matter: medical imaging, background removal, precise object inspection, mapping, agriculture (crops vs. weeds), and advanced autonomous systems.

Typical data: Image + pixel-accurate masks (more costly to label manually).
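The difference between the two segmentation outputs can be shown with a toy 4×6 image stored as grids of integers. In the semantic mask every car pixel carries the same class ID, so the two cars cannot be told apart; the instance mask gives each car its own ID, which makes counting possible. The IDs (0 = background) and grid contents are invented for illustration; real masks are full-resolution image arrays.

```python
# Toy 4x6 masks; 0 = background. IDs are illustrative only.
semantic_mask = [
    [0, 1, 1, 0, 1, 1],  # 1 = "car" class for every car pixel
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
instance_mask = [
    [0, 1, 1, 0, 2, 2],  # each car gets its own ID: car #1 and car #2
    [0, 1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

def pixel_count(mask, value):
    """Number of pixels carrying a given label."""
    return sum(row.count(value) for row in mask)

def distinct_ids(mask):
    """Distinct non-background IDs present in the mask."""
    return sorted({v for row in mask for v in row if v != 0})

print("car pixels:", pixel_count(semantic_mask, 1))                # how much area is "car"
print("regions in semantic mask:", len(distinct_ids(semantic_mask)))  # 1: cars are merged
print("cars in instance mask:", len(distinct_ids(instance_mask)))     # 2: cars are separate
```

Semantic segmentation is enough to answer “which area is drivable road?”, while instance segmentation is needed for “how many cars are there, and what is the exact shape of each?”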

Quick rule of thumb

- Need what only → Classification

- Need what + where (roughly) → Detection

- Need what + exact shape/boundary → Segmentation

Is Finding Objects Harder Than Naming Them? A Simple Breakdown

Image classification is an easier task for computers than object detection. An analogy helps illustrate the difference. Classification is like labeling a photograph with a single word or hashtag (e.g., #park). Detection, on the other hand, is like being handed a pen and asked to circle every tree, person, and dog in the same photograph. Detection involves more steps and requires greater precision than classification.

One reason detection is harder is the way we teach the AI. To train a classification model, we simply need to show it thousands of images with simple labels (for example, “cat”). To train a detection model, a human must manually draw a rectangle around every cat in each of those thousands of images. Detection therefore requires much more detailed training data, which makes it more challenging from the start.

Locating a particular object, such as a car, and drawing the bounding box that encloses it also requires much more computation than naming the whole image. The system must find every possible object (in this example, every car), determine the exact x and y coordinates of each object’s bounding box, and assign each one a confidence score that it really is a car. This jump in complexity is what separates a simple photo sorter from the perception system of a self-driving car. Detection must also cope with objects of varying visibility: sometimes an object shows enough detail for the system to identify its type confidently, and sometimes (for example, when it is small, blurry, or partly hidden) it does not.

Going a Step Further: When You Need to Color Inside the Lines

A bounding box is sufficient to locate a vehicle on the road, but imagine needing its exact shape. Suppose you want to cut the vehicle out of one ad and insert it into another: a rectangular bounding box would include portions of the road and sky, making the final product look sloppy and artificial. When that degree of accuracy is required, a bounding box is not enough.

This is where image segmentation comes in. Instead of drawing a box around an object, you give the computer a digital crayon and tell it to color in every single pixel that belongs to the object. The result is a precise, pixel-level boundary, effectively a stencil that separates the object from the rest of the scene. The main distinction between image segmentation and object detection is this shift from a rough box to an exact shape.
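The “stencil” idea can be sketched with a toy mask. Below, a small non-rectangular object (a hypothetical silhouette on a 5×5 grid, where 1 marks object pixels and 0 marks background) is cut out two ways: by cropping its bounding box, and by keeping only the mask’s pixels. The box crop also captures background pixels, which is the road-and-sky problem from the ad example. Plain Python lists stand in for real image arrays.

```python
# 1 = object pixel, 0 = background (road, sky). Toy data for illustration.
mask = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 1],
    [0, 1, 0, 1, 0],
    [0, 0, 0, 0, 0],
]

def bounding_box(mask):
    """Smallest (row_min, col_min, row_max, col_max) box, inclusive, around all 1s."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]

def box_vs_mask(mask):
    """Pixels kept by a box crop, pixels kept by the mask, and the difference."""
    r0, c0, r1, c1 = bounding_box(mask)
    box_pixels = (r1 - r0 + 1) * (c1 - c0 + 1)
    object_pixels = sum(sum(row) for row in mask)
    return box_pixels, object_pixels, box_pixels - object_pixels

box_px, obj_px, background_px = box_vs_mask(mask)
print(f"box keeps {box_px} pixels, mask keeps {obj_px}, extra background: {background_px}")
# prints: box keeps 20 pixels, mask keeps 11, extra background: 9
```

Almost half of the box crop here is background; the mask cutout keeps only the object itself.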

Chances are you’ve already seen this technology in use. If you’ve ever used a virtual background on a video call, the software had to precisely outline the shape of your body and hair to separate you from the room behind you. That precise outlining is probably one of the most common everyday examples of image segmentation.

Classification or Detection: Which Tool Do You Need?

Choosing between image classification and object detection really comes down to a simple mental model. Image classification is like adding a single #hashtag to a photo. Object detection is like drawing a treasure map, with a box around each find.

To decide which tool you need, ask yourself which of these two questions your task is trying to answer:

- Do you only need to know what is in the image? (e.g., categorizing photos as “beach” or “city”) If yes, image classification is enough.

- Do you need to know what is in the image and where it is located? (e.g., counting how many cars are in a parking lot) If yes, you need object detection.

This model takes the mystery out of the AI you encounter in daily life. When your smartphone gallery displays all the photos of your dog, you are seeing classification at work. When a self-checkout machine recognizes each item in your basket, you are seeing detection. Rather than wondering how a computer “sees,” you can simply ask which question it was designed to answer.
