Has your cell phone ever shown you photos of beaches in a matter of seconds after you type “beaches” into your phone’s photo search? If so, you may have been amazed by how many of the sandy shores and crashing waves you have photographed were immediately shown to you, drawn from thousands of photos.

It seems almost magical to show you all these images from your simple input of “beaches”. It is not magic, however, but rather a very sophisticated way for your cell phone to learn what a beach looks like. How a machine (with no understanding of memories or places) recognizes something as complicated as a beach in a photo is discussed below.

To a computer, a photo is not a memory or a location — it is an enormous mosaic composed of millions of small, individual colored blocks, called pixels. Your beautiful sunset photo is nothing more than a huge grid of numbers indicating exactly which color each dot was.

The jump from a grid of numbers to identifying a scene is the key to how image recognition actually works. Interestingly, in practice, the method is remarkably human-like. The computer learns, just as a young child does, by being shown many images of cars and other objects that we have manually labeled. As the computer looks at all these examples, it begins to learn the most common features — four wheels, windows, specific shapes — that make up an object. It is this process of the computer learning to recognize commonalities among an object’s patterns that enables AI to successfully recognize images.

This tutorial will explain, step by step, how image recognition works from the AI’s point of view: how a model learns to identify objects with remarkable accuracy, and how that pattern recognition runs the applications you use daily. When the learning is done by layered neural networks, the process is called deep learning image recognition.

This reference guide will walk you through how image recognition processes a grid of pixels as a numeric representation, then uses the basic features of an image (texture, edges, corners) with layered neural networks trained on large, labeled datasets to detect patterns in images. Models trained using these machine learning principles for image recognition will make probability-based predictions to classify and detect objects in images and will be used to drive a wide variety of applications, including medical imaging, driver-assistance systems, and retail.

This guide also examines some of the most common ways the process fails or introduces bias, why the quality and diversity of training data determine how well the model performs, and how poor data can lead to problems such as mistaking a muffin for a chihuahua. Once you see the end-to-end processing pipeline of an image recognition system, you will better understand what image recognition technology can and cannot do.

How Does Image Recognition Work?

Image recognition is the process of identifying, detecting, or recognizing objects, scenes, and patterns in an image. At its foundation, it relies on computer algorithms and artificial intelligence (AI) methods for analyzing visual data. Once an image is given to the recognition system, the image is broken down into its components, and features are extracted from them. The extracted features may include color, shape, texture, and similar properties that help the system determine which objects the image contains.

Once the features are extracted from the image, the system compares them against the patterns it knows from a large collection of images. This comparison is generally done using machine learning: the system was previously trained on numerous image datasets, so it knows what to look for. When the system finds a known pattern that matches what is in the picture, it can add labels describing the objects, as well as contextual information about the image.

By using techniques such as deep learning and neural networks, image recognition can become even more accurate. Both deep learning and neural networks can “learn” from past experiences and improve their ability to recognize objects as they see more data. As a result, image recognition technology has been applied in many areas, including social media’s auto-tagging and advanced security systems that can recognize people in real time based on their facial features.

Machine Learning Image Recognition

Machine learning image recognition is a subset of artificial intelligence (AI) that enables computer systems to identify and interpret what they see in their environment, using an assortment of algorithms and techniques. In addition to identifying patterns, objects, and faces in images, this technology is used by numerous industries to automate tasks (automotive and healthcare are just two examples) and by social media platforms and smartphone applications to enhance the user experience.

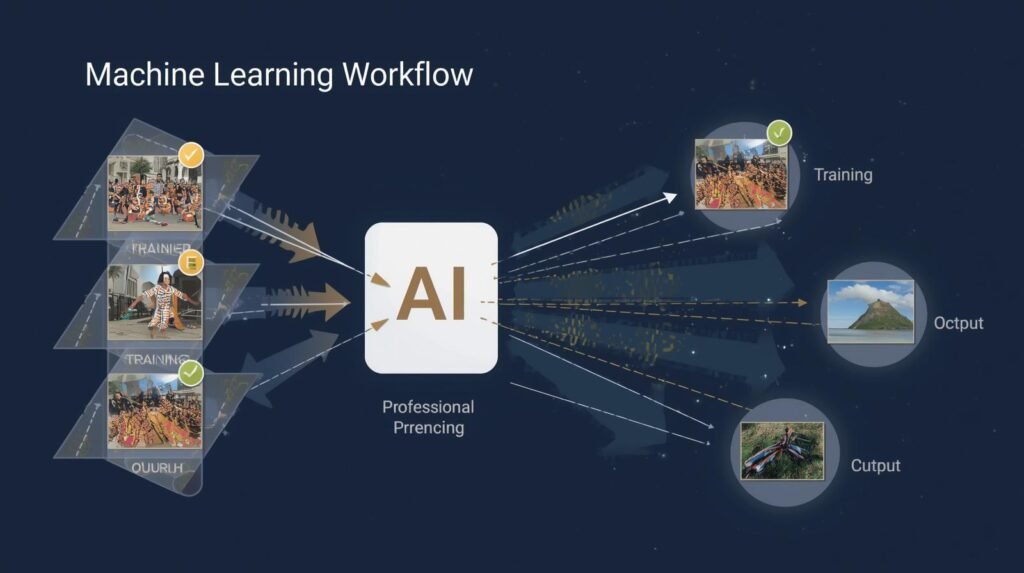

Essentially, the first step in the process involves feeding the machine learning model a large database of labeled images from which it can learn. As the model evaluates more images, it improves at distinguishing one category of objects from another (e.g., dogs vs. cats, or one brand logo from another). The ultimate goal of machine learning image recognition is to give computers the ability to make correct predictions about new, unlabeled images, which increases their usefulness across all types of applications.

Deep Learning Image Recognition

Deep Learning Image Recognition is an area of Artificial Intelligence (AI) focused on training AI systems to both see and interpret images in a manner similar to how humans do. The field relies on large amounts of data (images), using advanced algorithmic techniques that are specifically developed to handle image-based data as input, for the purpose of enabling AI systems to “see” objects, faces, patterns, and other characteristics within still images or video.

The primary way this happens is by providing a large amount of labeled image data to a neural network, a simulated computer system loosely modeled on the organization of the human brain. During training, the neural network learns to detect many of the characteristics and features in the training images. Through this process, the neural network improves its ability to detect and classify images it has never seen before.

Deep learning image recognition is making an impact across many fields, and as technology advances, its applications will continue to grow. It can be used in healthcare to help diagnose diseases by analyzing images such as X-rays and MRIs. Businesses are using image recognition to track customer behavior and deliver a personalized shopping experience. Image recognition is also being used to advance self-driving cars, which need to recognize road signs, pedestrians, and other vehicles. In these ways, image recognition is creating new ways for us to interact with our computers and paving the way for the next generation of artificial intelligence.

How Does an AI See an Image? It Starts with a Million Tiny Dots

When you quickly view a photo, your brain instantaneously identifies people, places, and objects. However, an artificial intelligence views an image much differently than we do. A computer does not recognize a single image — as we see it — but rather a vast mosaic comprised of millions of small individual dots of color. This is the beginning of a method that converts our visual perception of images into numerical representations, thereby illustrating how image recognition operates.

Look closely at the image of the apple above. You can see each colored square forming the shape of the apple. Each square is called a pixel and represents the smallest unit of a digital image. Every photo on your smartphone and every image on the Internet is actually a grid composed of hundreds of thousands or millions of the aforementioned tiny, colored squares, all arranged so that your eyes see a complete picture.

Here is where the magic turns to math. Computers struggle with abstract concepts like ‘an apple’, but they are very good at storing and manipulating numbers. A computer therefore treats the entire picture as a giant grid of pixels, with each pixel assigned a number or a combination of numbers (typically red, green, and blue values) that indicates its exact color and light intensity. That lovely sunset? An AI sees it as nothing more than a spreadsheet.
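To make the "spreadsheet" idea concrete, here is a minimal sketch in plain Python. The pixel values are invented for illustration, and `to_grayscale` is a hypothetical helper that uses a simple average; real image libraries store pixels in a similar grid but use more careful brightness formulas.

```python
# A tiny 2 x 3 "image": each pixel is an (R, G, B) triple with values 0-255.
# These pixel values are made up for illustration.
image = [
    [(255, 0, 0), (255, 128, 0), (255, 255, 0)],  # row 0: red, orange, yellow
    [(0, 0, 255), (0, 0, 128), (0, 0, 0)],        # row 1: blue, dark blue, black
]


def to_grayscale(rgb_image):
    """Reduce each (R, G, B) pixel to one brightness number (simple average)."""
    return [[sum(pixel) // 3 for pixel in row] for row in rgb_image]


height = len(image)
width = len(image[0])
gray = to_grayscale(image)

# Every pixel contributes three numbers, one per color channel.
print(f"{height} x {width} pixels = {height * width * 3} numbers")
for row in gray:
    print(row)
```

A photo from a phone camera works the same way, only with millions of pixels instead of six.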

That massive grid is the starting point for the AI’s identification of the photo’s contents. Since the AI does not possess vision but instead uses data, this is the first step in the AI’s true work: finding patterns in the sea of numbers.

What Are the First Clues an AI Looks For? Finding Simple Patterns

Beginning with the conversion from an image to a digital spreadsheet (or grid of numbers), the AI has a second problem to solve: converting this spreadsheet into usable information. To accomplish this, the system acts as a detective: instead of considering the entire picture, it focuses on identifying the most basic signs or evidence in the data.

The system initially searches for basic patterns in the data during its first pass over the grid of numbers. Instead of trying to identify a “face,” or a “tree”, etc., the system simply tries to find sudden changes in the numerical values represented by each pixel. When the system finds such sudden changes, those represent an edge. When two or more edges meet, they form a corner. When it identifies a group of pixels with the same value, it represents a color patch. Artificial intelligence pattern recognition is largely concerned with identifying simple geometric shapes, the very simplest elements of any image.
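The "sudden change" idea can be sketched in a few lines. This is a simplified illustration, assuming a single row of grayscale brightness values; `find_edges` and the threshold of 50 are hypothetical choices, and real systems use learned filters over two-dimensional neighborhoods rather than a fixed rule like this.

```python
def find_edges(row, threshold=50):
    """Return the positions where brightness jumps by more than `threshold`
    between one pixel and its right-hand neighbour."""
    edges = []
    for i in range(len(row) - 1):
        if abs(row[i + 1] - row[i]) > threshold:
            edges.append(i)
    return edges


# One row of made-up brightness values: dark, then bright, then dark again.
row = [10, 12, 11, 200, 205, 203, 15, 14]
print(find_edges(row))  # positions 2 and 5, where dark meets bright
```

The runs of similar values between the two jumps are what the text calls a color patch.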

The first critical step in identifying the basic building blocks from raw pixel data is called feature extraction. Feature extraction can be thought of as AI developing a simplified “map” of the image: instead of millions of pixels, it now has a map of the image’s salient lines, curves, and texture characteristics. What exactly is feature extraction in images? Feature extraction is simply the translation of raw data into usable data – one single, easy-to-understand clue at a time. In fact, feature extraction is one of the earliest and most powerful techniques in image recognition.

A series of lines and corners won’t create a cat. These features are much like individual LEGO bricks: they are essential, but on their own they are not enough. You need instructions for assembling the simple pieces into a whisker, an ear, or a tail. Thus, the AI has to go to school to learn how to assemble the bricks, and its textbook is a massive set of digital flashcards.

How Does Computer Vision Work?

Computer Vision is an exciting subset of AI that focuses on how machines understand and interpret visual information in the world around us. The development of algorithms and models that enable computers to identify objects, people, and other elements in images and video is the basis of computer vision. Acquiring visual information using cameras and/or sensors is typically the first step. Subsequently, the captured visual data is preprocessed to improve quality and remove noise, yielding a cleaner, more usable dataset for analysis.

Following preprocessing, the second step most often involves feature extraction, in which the computer identifies the aspects of an image that matter for identification. These features may include edges, shapes, and textures, which allow the computer to understand and recognize what it sees. Deep learning is also widely used for feature extraction, employing artificial neural networks that can be trained to identify object types (e.g., animals, vehicles) from large sets of labeled images. Once trained, the network can predict the presence and location of objects in images that it has not seen before.

Computer Vision systems will use this prediction data for several applications. One example is Facial Recognition Technology (FRT). FRT uses computer vision to identify and verify individuals based on their facial characteristics. Computer Vision also aids autonomous vehicles. The vehicle will recognize traffic signs, pedestrians, and other vehicles as it drives, enabling it to follow the rules of the road.

Medical imaging is another area where computer vision has been applied. Computer vision can aid doctors in diagnosing disease by analyzing X-rays, MRIs, and CT scans. A final example is quality control in industrial automation, where examining items as they move along an assembly line allows companies to catch defects early and reduce waste and errors. Overall, computer vision combines modern hardware and advanced algorithms to narrow the gap between how humans perceive and understand visual information and how machines do.

How Do You Teach a Computer What a ‘Dog’ Is? The Digital Flashcard Method

A device learns to recognize objects through a huge library of digital flashcards. The way to train a computer to recognize dogs isn’t by writing a complex program to tell it to look for “fur” and a “wagging tail”. Rather, you would show thousands of pictures of dogs (and other things), each labeled by people (e.g., “dog”, “car”, “tree”). The process of collecting and labeling all these photos is known as data collection and labeling, and it is the initial step in training an image recognition model.

Once the AI has access to this image library, it can begin working on its homework. The work is highly detailed: the system analyzes each image labeled “dog” and cross-checks it against the simple maps of lines, curves, and textures it previously created. As the system continues to study these images, it begins to identify patterns and connections. Specifically, it finds that most images labeled “dog” feature a mix of furry textures and typically include triangular ear shapes and a dark circle for the nose.

The system has not developed an understanding of “dog” as humans do. Rather, the system has established a statistical recipe of visual characteristics which, when viewed collectively, will highly likely indicate that an image was labeled “dog.”
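One way to picture a "statistical recipe" is the sketch below. It is not how deep learning models are actually trained; it swaps in a much simpler technique (a nearest-centroid classifier) and assumes hand-made feature scores that a real system would have to extract itself. The feature names, the numbers, and the `train` and `predict` helpers are all hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical feature scores between 0 and 1 for each labeled "flashcard":
# (furry texture, pointed ear shapes, wheel-like circles)
training_data = [
    ((0.9, 0.8, 0.0), "dog"),
    ((0.8, 0.9, 0.1), "dog"),
    ((0.1, 0.0, 0.9), "car"),
    ((0.0, 0.1, 1.0), "car"),
]


def train(examples):
    """Average the feature vectors of each label: the label's 'recipe'."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(vectors) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(model, features):
    """Pick the label whose average features are closest to the new image's."""
    return min(model, key=lambda label: math.dist(model[label], features))


model = train(training_data)
# A new, unseen image that is fairly furry with somewhat pointed ears.
print(predict(model, (0.7, 0.6, 0.2)))
```

The model never stores a definition of "dog". It only keeps numbers summarizing what the images labeled "dog" had in common.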

The end product of these processes is models—digital brains—engineered to perform a particular function (for example, recognize what they have been trained to identify) by using the vast amounts of knowledge compiled during training. This knowledge is represented as statistics linking various features to their corresponding labels. In other words, once an enormous amount of information is sifted through and the underlying patterns are defined, a large collection of data is distilled down into a single, specialized tool, which is the model itself.

With your finished model, you have completed the study phase, and the AI is ready to graduate from flashcard study to actual testing. At this point, the AI will be given a completely new photo it has never seen before and asked to make a prediction about it. So, how does the model take all the clues from the new picture and come up with a confident answer, such as, “I am 94% sure that is a dog”? The model does this by organizing its knowledge into layers, much like a team of experts working together to solve a puzzle, and this layered approach is critical to how image recognition operates.

How Does the AI Make a Final Guess? Thinking in Layers

The model does not simply view the new image and instantly identify what is in it. Instead, it works through a series of steps, much like a game of 20 questions, in which the answers to earlier questions help determine the answers to later ones. Systems like this are usually called neural networks; however, they can be thought of more simply as an assembly line of experts, each building on the work of the previous one.

This multi-layered process is why an AI can move from identifying straight lines to identifying a complex object. Think of this as a new image being sent to a computer in order to identify whether that image shows a human face. Each layer asks increasingly complex questions as the data passes through it.

- Layer 1 (Line Detecting Experts): This first layer identifies only the most basic components. These experts report their identification of the basic elements, such as “There’s a sharp vertical line here”, “I’ve identified a slight curve”, and “That is a small circle and dark”. They send these basic findings on to the second layer.

- Layer 2 (Shape Builders): This group does not look at individual lines; it uses the findings from the previous layer to identify patterns. These experts may decide, “A combination of the curves gives us an oval,” or “Two circles that are very dark appear to be eyes.”

- The Final Layer (Decision Maker): The manager at the top of the organization will receive all the reports and make their own decision based on what they see. Based on reports of an oval shape, two circular/eye shapes, and one nose shape, they have determined that there is a 95% chance the image depicts a face.

Notice that the final answer was not simply yes or no. It was a confidence score, that is, a probability. The model does not have absolute certainty; rather, it estimates how likely a new picture is to be an example of the kinds of pictures it saw during training. This is why the technology can make mistakes. For instance, when a photo tagging application labels a Cabbage Patch Kid doll as a real person, it is because the doll has enough similarity to the ‘face’ model’s feature representation that the model makes an educated guess with high confidence, even though it is wrong.
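The layered "assembly line" and the final confidence score can be sketched as a very small neural network. This is only an illustration under strong assumptions: every weight and input value below is hand-picked rather than learned, the network has just two layers, and the feature meanings in the comments are hypothetical. Real models have many more layers and millions of learned weights.

```python
import math


def relu(values):
    """Keep positive signals and drop negative ones."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that add up to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Layer 1 output (assumed): how strongly simple features were detected,
# e.g. a curve, a dark circle, a vertical line.
edge_features = [1.0, 0.8, 0.9]

# Layer 2 (shape builders): hand-picked weights, not learned ones.
shape_weights = [[1.0, 0.5, 0.0],   # "oval" detector
                 [0.0, 1.5, 0.2]]   # "eyes" detector
shape_biases = [0.0, -0.1]

# Final layer (decision maker): one raw score per possible label.
decision_weights = [[1.2, 1.0],     # evidence for "face"
                    [-0.5, -0.8]]   # evidence for "not a face"
decision_biases = [0.0, 0.5]
labels = ["face", "not a face"]

shapes = relu(dense(edge_features, shape_weights, shape_biases))
scores = dense(shapes, decision_weights, decision_biases)
probabilities = softmax(scores)

best = max(range(len(labels)), key=lambda i: probabilities[i])
print(f"{labels[best]}: {probabilities[best]:.0%} confident")
```

Because the output is a probability rather than a yes or no, an application can decide how confident the model must be before it acts on the guess.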

In less than one second, all of these processes occur — from pixel analysis to the creation of the final probability. It is this layering and statistical analysis that allows an AI to see and analyze a world it has no understanding of. However, identifying a single item is only the first step in a much larger task. The real question now is, how does the AI identify the total scene? Will the AI recognize a picture as simply being a “beach photo”, or can it determine whether a boat is present on the beach? This difference represents a major leap forward in artificial intelligence.

Object Detection in Images

Object detection in images is an important subfield of computer vision. Its main objective is to identify and locate all the objects that appear in an image. Algorithms and machine learning methods are used to train computers to detect objects and classify them by type (e.g., person, dog, car). This capability supports many applications, including autonomous vehicles, video-based security monitoring, and image tagging on social networks, where quickly and accurately identifying objects helps users better understand what they see.

Object Detection primarily uses CNNs, which are very effective at extracting features from images. After learning from thousands of images in the training dataset, the neural network can be used to classify new images, detect objects, and draw a bounding box around them. Advances in this area have enabled the development of new, more efficient detection algorithms. More efficient algorithms enable real-time detection. Real-time detection enables a system to process a live video feed and respond to events in its environment. Object Detection is constantly evolving due to ongoing technological and research innovations.

Is It a ‘Beach Photo’ or Is There a ‘Boat on the Beach’? A Crucial AI Distinction

The difference between a scene and the objects in that scene gets at the essence of two distinct, yet complementary, abilities of artificial intelligence. The most basic is image classification, which places a single label on the entire image. When your smartphone organizes photos in your gallery into albums titled “Sunset,” “Food,” or “Pet,” it uses classification to assign one high-level category or theme to each photograph. The AI takes an overall view of the picture and draws a conclusion based on what it sees.

But sometimes, a single label isn’t enough. That is when the more developed ability to identify objects in images comes into play. Rather than simply asking, “What is this a picture of?” object detection in images asks, “What specific things are in this picture, and where are they?” This ability to detect objects in an image allows for a more detailed search of all items within the image, then places an invisible box around each item it identifies, with precision as to where each item is located (that is, a person is here, a car is here, and a dog is there).
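The difference shows up clearly in the shape of the output. The sketch below uses made-up results and hypothetical `Classification` and `Detection` types; it does not run a real model, it only shows what each kind of answer might look like for the same beach photo.

```python
from dataclasses import dataclass


@dataclass
class Classification:
    """One label for the whole image."""
    label: str
    confidence: float


@dataclass
class Detection:
    """One label per object, plus where that object is."""
    label: str
    confidence: float
    box: tuple  # (left, top, right, bottom) in pixels


def box_area(box):
    """Area in pixels covered by a bounding box."""
    left, top, right, bottom = box
    return (right - left) * (bottom - top)


# Made-up outputs for the same photo.
classification = Classification("beach", 0.97)
detections = [
    Detection("boat", 0.91, (120, 200, 340, 310)),
    Detection("person", 0.84, (400, 180, 450, 320)),
]

print(f"Whole image: {classification.label}")
for d in detections:
    print(f"{d.label} at {d.box}, covering {box_area(d.box)} pixels")
```

Classification answers "what is this a picture of?" with one record, while detection answers "what is in it, and where?" with a list of records.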

You have likely seen this useful application in use. Social media sites often suggest tagging a friend in an image by detecting the face and isolating its location. Similarly, when fun camera apps add virtual glasses to your head, they can do so because the app first detects the exact locations of your face, eyes, and nose in real time.

In effect, classification is like giving a book its title, whereas detection is like writing the table of contents for the same book. The leap from identifying a scene’s theme to pointing out what is in that scene, and where, is an important one. Classification and detection models have enabled many new technologies that are influencing how we interact with the world, far beyond our smartphones, which is why it pays to understand how image recognition works.

Where You See AI Vision Working in the Real World (It’s Not Just Your Photos)

This ability to recognize specific images is not limited to organizing the family photo album; this technology is also driving applications that save time, money, and potentially lives. As we’ve seen, the ability to recognize the faces of friends and family has moved beyond our personal devices and into various industries.

For example, in health care, image recognition provides radiologists with an extra set of eyes when reviewing the numerous medical scans they must review every day. When an AI has been trained on millions of medical scans, it can identify subtle abnormalities on X-rays or MRIs that may indicate an emerging disease. In this example, the AI does not replace the doctor; it serves as a second pair of eyes to help the doctor identify potential issues faster and more effectively, leveraging deep learning for image recognition.

Many modern cars use the same “see and react” approach. Emergency braking for pedestrians who step into the road and camera systems that help a driver stay centered in the lane both rely on continuously monitoring surrounding objects, lane markings, and signs to make quick, safety-related decisions. This type of technology is now also being used in retail to enable cashierless checkout, with cameras that recognize each item you place in your shopping cart.

However, there is a major problem with using such technologies to make life-or-death decisions: their error rate. No matter how advanced the technology may be, it has flaws, so an important concern remains: what happens if the computer misreads the image? Even the most capable AI makes mistakes at times, and those mistakes can cause confusion and, in certain cases, serious consequences.

Why Your Phone Thinks a Muffin Is a Chihuahua: When Good AI Goes Bad

What do we find out when those systems fail? Sometimes, they simply produce something that makes no sense at all. We have probably all seen the popular Internet meme comparing a blueberry muffin to a chihuahua’s face. For a person, it is easy to tell the difference. However, to an AI trained to recognize a “small, roundish object with dark spots,” the primary elements in both images are very similar. The AI is not “confused” as a human might be; it is simply doing the best it can with the limited visual evidence it was trained on.

Most often, the reason for these errors lies in how the AI was trained — in other words, which training (or educational) data was used. Since a model’s performance in identifying images depends on both the quality and diversity of the flashcards used to train it, if you have only shown the model pictures of cars on sunny days, it may have difficulty recognizing a car covered with snow. It is not about making the image recognition model smarter; it is simply about providing the model with a more varied, richer collection of examples to learn from.
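A simple way to spot this kind of gap is to count what the flashcards actually contain before training. The sketch below assumes each training example carries a hypothetical condition tag (such as the weather) alongside its label; the data and the `underrepresented` helper are invented for illustration.

```python
from collections import Counter

# Made-up training set: (label, condition) for each labeled photo.
training_examples = [
    ("car", "sunny"), ("car", "sunny"), ("car", "sunny"),
    ("car", "snow"),
    ("dog", "sunny"),
    ("dog", "indoor"),
]

counts = Counter(training_examples)


def underrepresented(counts, minimum):
    """List the (label, condition) pairs with fewer than `minimum` examples.

    Note: this only flags combinations that appear at least once; pairs that
    are missing entirely would need a separate check against an expected list.
    """
    return sorted(pair for pair, n in counts.items() if n < minimum)


for label, condition in underrepresented(counts, minimum=2):
    print(f"Need more photos of: {label} ({condition})")
```

A count like this does not fix the model, but it points to where more varied examples are needed.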

When data includes human biases, the challenges for facial recognition technology are even greater. A system trained on photos of predominantly lighter-skinned individuals will not perform as well at identifying people with darker skin. The system did not intentionally exclude darker-skinned individuals; it simply lacks experience with them, because the data it learned from carried the same bias in how it represented the world.

These failures illustrate the basic truth about the system: it does not see a “face” or a “muffin”. It sees only a grid of numbers and uses patterns learned from past experiences to statistically infer what something probably looks like. Recognizing this fundamental difference provides you with a new level of understanding and clarity regarding how machines see and interpret our world.

You Now Have a New Superpower: Seeing the World Like an AI

What once seemed like pure digital magic has become something you can visualize. Previously, a phone that could find every beach picture in your photo gallery was a great accomplishment, but it was also a mystery. Today, you can follow the logical sequence that occurs within seconds: the mosaic of color made up of the millions of pixels in each image is turned into simple patterns of lines and texture, which ultimately lead to a single, final guess: “That’s a beach.”

You’ve learned how this type of image recognition learning takes place through millions of “digital flash cards,” and how multiple layers of digital “experts” work together to convert those patterns into a single, definitive conclusion: “That’s a beach.” Understanding how image recognition learning processes work helps you appreciate the complexity of AI, which spans many disciplines.

With this new perspective of how technology works, each time you use your banking app to deposit a check or open your phone with your face, you will be able to see the same process that is working for you. What was once simply “magic” is now understandable as a methodology.