“Imagine building an entire assembly line for a large robotic project. At the end, you find out that one part, a $1 million, two-ton robot arm, cannot be installed because it does not fit properly. If the arm is misaligned by even a small amount, it will fail catastrophically. If the failure occurs during operation, it may also damage equipment or harm workers.

Testing a robot in physical environments to make sure everything works as planned has long been a challenge. Each new version of the software requires multiple time-consuming trials on the production machine, which slows development.

Not only is this process very expensive, but it also creates real-world risks to people and property. A software error does not just terminate the program; it can make the robot move erratically, damaging equipment (including the robot itself) or injuring the operator. What if you could run every one of those tests, including the ones that break the robot, a hundred times without spending a single dollar on repairs?”

Summary

Virtual robotic testing uses a highly accurate digital model of a robot and its operating environment, called a “digital twin,” to run the same control software as the physical robot in a safe simulated environment before any real hardware is used. This removes significant barriers to robotics development by reducing reliance on trial and error and on expensive, time-consuming physical trials.

One of the largest advantages of virtual robotic testing is cost savings: mistakes that would otherwise cause expensive repairs, wasted material (paint, welding wire, etc.), and lost production from downtime are largely avoided when testing happens in simulation. Simulated tests also run quickly, so developers can evaluate a wide range of options when optimizing paths, cycle time, and task sequence. Teams can use offline programming to develop and prepare robot code before the physical robot arrives, reducing on-site setup time.

Beyond cost savings and faster development, virtual robotic testing offers a major safety advantage. Developers can repeatedly simulate catastrophic events such as collisions, emergency stops, and other rare failure conditions without putting personnel or equipment at risk. This matters especially for collaborative robots, which operate alongside humans.

The article also describes current uses of virtual robotic testing in automotive manufacturing, large e-commerce distribution centers, and NASA’s Mars Rover program. It concludes with a major limitation of the approach, the “reality gap”: while virtual testing can significantly reduce the physical testing needed to reach acceptable performance, it cannot eliminate physical testing entirely.

Virtual Robot Testing

Virtual robot testing refers to testing robotic behavior in simulation before (or alongside) testing it in the physical world. With virtual robot testing, teams can evaluate robot designs, motion paths, and control methods without damaging robots or tooling and without incurring downtime. It is especially valuable for teams with expensive prototype tooling, limited lab access, or prototypes that change rapidly.

The first step in the standard workflow for virtual robot testing is to create digital models of the robot, its cell or operating area, and all other relevant equipment it interacts with, including but not limited to grippers, conveyor belts, fixtures, and safety zones.

The second step of virtual robot testing is to create scenarios that validate that the robot has enough reach and clearance, meets its cycle time, and avoids collisions. A good simulation also includes sensor models with noise, so teams can see how sensors (e.g., camera-based vision systems, laser-based LiDAR systems, force-feedback systems) behave under different conditions. This lets teams catch “it only works in an ideal world” issues much earlier than physical testing would.
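As a rough illustration of what a “sensor model with noise” can mean, the Python sketch below simulates a single range reading (for example, one LiDAR beam) with additive Gaussian noise, occasional dropouts, and clipping to the sensor’s maximum range. The noise model and its default values (`sigma_m`, `dropout_prob`, `max_range_m`) are illustrative assumptions, not figures from any particular sensor or simulator.

```python
import random
from typing import Optional

def noisy_range(true_distance_m: float, rng: random.Random,
                sigma_m: float = 0.02, dropout_prob: float = 0.01,
                max_range_m: float = 10.0) -> Optional[float]:
    """Simulate one reading from a range sensor.

    Hypothetical model: additive Gaussian noise with standard deviation sigma_m,
    random dropouts (returned as None), and clipping to [0, max_range_m].
    """
    if rng.random() < dropout_prob:
        return None  # missed return, e.g. from a dark or reflective surface
    reading = true_distance_m + rng.gauss(0.0, sigma_m)
    return min(max(reading, 0.0), max_range_m)

# A fixed seed gives the same noise sequence on every run.
rng = random.Random(42)
readings = [noisy_range(2.0, rng) for _ in range(1000)]
valid = [r for r in readings if r is not None]
print(f"{len(valid)} valid readings, mean = {sum(valid) / len(valid):.3f} m")
```

Seeding the generator makes every noisy run reproducible, which matters once these readings feed automated tests.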

The primary advantages of virtual robot testing are cost and time savings from a faster development and testing process. Instead of waiting for a time slot on a physical robot, you can test your application across dozens of settings and configurations in minutes. For example, you can use virtual testing to determine experimentally the best speeds, accelerations, and constraints for a particular path.
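A minimal sketch of such an experiment, assuming a straight point-to-point move with a trapezoidal velocity profile and a single hypothetical constraint: the inertial force on the payload (mass × acceleration) must not exceed the gripper’s holding force. A real study would call a simulator instead of this closed-form timing model; the payload, force, and candidate values are made up for illustration.

```python
import itertools
import math

def move_time_s(distance_m: float, v_max: float, a_max: float) -> float:
    """Duration of a point-to-point move with a trapezoidal velocity profile
    (accelerate at a_max, cruise at v_max, decelerate at a_max)."""
    if distance_m >= v_max ** 2 / a_max:
        # A cruise phase exists: cruise time plus the extra time spent ramping.
        return distance_m / v_max + v_max / a_max
    # Too short to reach v_max: triangular profile.
    return 2.0 * math.sqrt(distance_m / a_max)

def best_settings(distance_m, payload_kg, grip_force_n, speeds, accels):
    """Grid search for the fastest (v, a) pair whose inertial load m*a stays
    within the gripper's holding force (a simplified, hypothetical constraint).
    Returns (time, v, a), or None if no pair is feasible."""
    feasible = [(move_time_s(distance_m, v, a), v, a)
                for v, a in itertools.product(speeds, accels)
                if payload_kg * a <= grip_force_n]
    return min(feasible) if feasible else None

t, v, a = best_settings(distance_m=1.2, payload_kg=5.0, grip_force_n=20.0,
                        speeds=[0.5, 1.0, 1.5, 2.0], accels=[1.0, 2.0, 4.0, 8.0])
print(f"fastest feasible: v={v} m/s, a={a} m/s^2, t={t:.2f} s")
```

Under these assumptions the search picks v = 2.0 m/s and a = 4.0 m/s², the fastest pair that keeps the inertial load within the 20 N grip limit. Replacing `move_time_s` with a call into a simulator keeps the same search structure.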

As a result, you have a much better chance of developing a robust application that works across a wide range of objects and environments. And because no physical robot is involved, nothing needs expensive repairs when something goes wrong during testing.

Finally, virtual robot testing lets you “stress” your application and see how it behaves under extreme conditions such as occluded markers, glare, changing payloads, tight tolerances, and obstacles. Testing these conditions before the application reaches the shop floor helps you find problems and develop fixes before they cause damage. The same simulated system also supports operator training: operators and maintenance personnel can practice operating and maintaining the robot without the risks of working on a physical machine.

Robot Simulation: Test Robots Virtually Before Real-World Deployment

Robot simulation lets teams test how a robot will behave in a detailed digital replica of its production area, warehouse route, or customer site before physically placing it there. By modeling the robot, its tools, and its environment, and then running realistic simulation tests, teams can identify what works, what does not, and what needs improvement. Robot simulation is typically paired with virtual robot testing, which uses automated test cases to evaluate performance, safety, and reliability long before any hardware is at risk.

A comprehensive simulation package for industrial arms should include three-dimensional geometry, kinematic analysis, and physics simulation that reproduces the robot’s motion under real-world conditions. For industrial arms, the most important uses are verifying reach, cycle time, and joint limits, and planning paths that avoid collisions with fixtures, people, and other objects.
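To make “verifying reach and joint limits” concrete, here is a sketch for the simplest possible arm: a planar two-link arm with link lengths `l1` and `l2`. It solves inverse kinematics with the law of cosines, tries both elbow configurations, and accepts a target only if one solution respects the joint limits. A real six-axis industrial arm needs its vendor’s kinematic model or a robotics library; the link lengths and limits here are hypothetical.

```python
import math
from typing import Optional, Tuple

Limits = Tuple[float, float]

def solve_ik(x: float, y: float, l1: float, l2: float,
             q1_limits: Limits, q2_limits: Limits) -> Optional[Tuple[float, float]]:
    """Return joint angles (q1, q2) in radians that place the tip of a planar
    two-link arm at (x, y) within the joint limits, or None if none do."""
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= c2 <= 1.0:
        return None  # target lies outside the reachable annulus
    for q2 in (math.acos(c2), -math.acos(c2)):  # the two elbow configurations
        q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
        q1 = math.atan2(math.sin(q1), math.cos(q1))  # wrap into [-pi, pi]
        if q1_limits[0] <= q1 <= q1_limits[1] and q2_limits[0] <= q2 <= q2_limits[1]:
            return q1, q2
    return None

def forward(q1: float, q2: float, l1: float, l2: float) -> Tuple[float, float]:
    """Forward kinematics: tip position for the given joint angles."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))

LINK1, LINK2 = 0.8, 0.6          # hypothetical link lengths in metres
FREE = (-math.pi, math.pi)       # no joint limits beyond a full turn
for target in [(1.0, 0.5), (2.0, 0.0), (0.1, 0.0)]:
    sol = solve_ik(*target, LINK1, LINK2, FREE, FREE)
    print(target, "reachable" if sol else "NOT reachable")
```

Checking the solution with `forward` is a cheap sanity test that the inverse kinematics is consistent before any motion is planned.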

Mobile robot simulation packages, in contrast, should represent maps, obstacles, sensor noise, and traffic flow patterns so teams can evaluate navigation and recovery behaviors. Combining robot simulation with virtual robot testing makes it easy to automate regression testing: the same test cases run on every software change, so regressions are caught before they reach hardware.
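For a mobile robot, the simplest form of such a regression suite is a handful of maps with known expected outcomes that are rerun after every change. In the sketch below, a breadth-first search on an occupancy grid stands in for the real navigation stack (an assumption; a real suite would drive the planner under test inside the simulator), and each hypothetical scenario records whether a path should exist and how long it should be.

```python
from collections import deque
from typing import List, Optional, Tuple

Cell = Tuple[int, int]

def shortest_path_len(grid: List[str], start: Cell, goal: Cell) -> Optional[int]:
    """Breadth-first search on an occupancy grid where '#' marks an obstacle.
    Returns the number of 4-connected moves on the shortest path, or None."""
    rows, cols = len(grid), len(grid[0])
    frontier = deque([(start, 0)])
    seen = {start}
    while frontier:
        (r, c), dist = frontier.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            free = 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#"
            if free and (nr, nc) not in seen:
                seen.add((nr, nc))
                frontier.append(((nr, nc), dist + 1))
    return None

# Hypothetical scenarios: (name, map, start, goal, expected path length or None).
SCENARIOS = [
    ("detour around rack", ["....#....",
                            "....#....",
                            "....#....",
                            "........."], (0, 0), (0, 8), 14),
    ("aisle blocked", ["....#....",
                       "....#....",
                       "....#....",
                       "....#...."], (0, 0), (0, 8), None),
]

def run_regression() -> List[str]:
    """Names of scenarios whose result differs from the expected one."""
    return [name for name, grid, start, goal, expected in SCENARIOS
            if shortest_path_len(grid, start, goal) != expected]

print("failed scenarios:", run_regression() or "none")
```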

The largest advantage of robot simulation is the ability to run many experiments at once. Instead of waiting for lab space to become available, you can run them in parallel, including “what if” variations in layout, payload, or camera position.

Robot simulation is therefore best suited to early-stage design questions, such as selecting a gripper type, limiting acceleration rates, or choosing an optimal workcell layout. Using virtual robot testing to validate the results of these experiments also gives you a set of repeatable tests, for example “no collisions,” “task completed within 35 seconds,” or “success rate > 99% over 1,000 runs.”
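The three example criteria can be written as an automated check over a batch of simulated runs. This is a minimal sketch: `RunResult` is a hypothetical record that a simulator or test harness would fill in, and the batch below is synthetic data, not measured results.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class RunResult:
    """Outcome of one simulated run (hypothetical fields)."""
    completed: bool
    collided: bool
    duration_s: float

def check_acceptance(runs: List[RunResult], max_duration_s: float = 35.0,
                     min_success_rate: float = 0.99) -> List[str]:
    """Return the violated criteria; an empty list means the batch passes."""
    problems = []
    if any(r.collided for r in runs):
        problems.append("no collisions: violated")
    if any(r.completed and r.duration_s > max_duration_s for r in runs):
        problems.append(f"task within {max_duration_s:g} s: violated")
    successes = sum(r.completed and not r.collided for r in runs)
    rate = successes / len(runs)
    if not rate > min_success_rate:  # the criterion is strictly greater than 99%
        problems.append(f"success rate > {min_success_rate:.0%}: violated ({rate:.1%})")
    return problems

# Synthetic batch: 995 clean completions at 30 s and 5 runs that never finished.
batch = [RunResult(True, False, 30.0)] * 995 + [RunResult(False, False, 60.0)] * 5
print(check_acceptance(batch) or "all criteria met")
```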

Robot simulation also saves money and improves safety. Physical system failures can damage equipment, waste components, and create unsafe conditions. Robot simulation reduces the risk of such failures by revealing likely faults before the system is deployed in the real world, for example collisions caused by inadequate clearance, loss of a stable grasp, or insufficient sensor coverage. Robot simulation is also an effective tool for operator training; operators can practice both normal operating procedures and fault recovery without stopping production or putting anyone at risk.

To get the most value from robot simulation, start with a single mission-critical process (e.g., pick-and-place, palletizing, inspection, or autonomous delivery). Define measurable success criteria, and then use virtual robot testing to cover as wide a range of scenarios as possible. By the time the physical robot is deployed, simulation and virtual testing will already have shown that the process works reliably and consistently across the conditions you modeled.

Your Robot’s Personal Flight Simulator: What is a “Digital Twin”?

Flight simulators are digital models of aircraft that reproduce how a real aircraft responds, allowing pilots to practice safely before flying the real thing. Robotics engineers apply the same idea in the form of a Digital Twin: a detailed 3D model of a robot inside a computer. Engineers can run as many tests as they need on the Digital Twin without risking damage to, or loss of, the actual robot.

To be useful, a Digital Twin must include more than the robot model; the robot’s entire future work area must be recreated in detail. A Digital Twin of a robot that will operate in a warehouse must include the robotic arm and every part of the warehouse it interacts with: the shelves it must reach, the boxes it will pick up, the conveyor belts it will interface with, and so forth. By recreating the full operating environment, each test can reproduce the conditions under which the robot will actually work.

The main advantage of a Digital Twin is that the same control software (the “brain”) that runs the physical robot also runs the Digital Twin. Because the twin reproduces the real system with high fidelity, engineers can find and fix problems before they turn into costly failures. This makes it possible to engineer and test innovative solutions with little financial risk.
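One common way to let identical control code drive both the physical robot and its twin is to have the controller talk only to an interface, with one implementation backed by the hardware drivers and one backed by the simulator. The sketch below assumes a single linear axis, a hypothetical `RobotIO` interface, and a toy `SimulatedAxis` standing in for the physics engine; only the simulated side is shown.

```python
from typing import Protocol

class RobotIO(Protocol):
    """What the control code may use. A hardware driver and a simulator
    would both implement these methods (hypothetical interface)."""
    def read_position(self) -> float: ...
    def command_velocity(self, v: float) -> None: ...

class SimulatedAxis:
    """Toy digital twin of one linear axis that integrates commanded velocity."""
    def __init__(self, position: float = 0.0) -> None:
        self.position = position
        self.velocity = 0.0

    def read_position(self) -> float:
        return self.position

    def command_velocity(self, v: float) -> None:
        self.velocity = v

    def advance(self, dt: float) -> None:
        """Called by the simulation loop, never by the control code."""
        self.position += self.velocity * dt

def control_tick(robot: RobotIO, target: float, gain: float = 2.0,
                 v_limit: float = 0.5) -> None:
    """The 'brain': one proportional-control step. It only sees RobotIO, so the
    same function can run against the twin or against the physical axis."""
    error = target - robot.read_position()
    robot.command_velocity(max(-v_limit, min(v_limit, gain * error)))

twin = SimulatedAxis()
for _ in range(500):            # 5 s of simulated time at dt = 10 ms
    control_tick(twin, target=1.0)
    twin.advance(0.01)
print(f"twin position after 5 s: {twin.position:.4f} m")
```

On the real robot, a class wrapping the motor drivers would implement the same two methods, and `control_tick` would not change.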

How to Build a Robot’s Virtual Playground

Building the virtual version of the robot begins with a detailed 3D model of the robot and its operating area (the digital plan). The robot’s joints, screws, and wires are modeled to match the real robot, and the virtual environment includes the factory floor, conveyor belts, and any other obstacles the robot may encounter. Designing a realistic virtual environment is much like building the worlds seen in today’s video games.

However, a visually exact replica of the robot is not enough; the virtual environment must also behave like the real world. The physics engine acts as the “rule book” for the virtual environment, supplying rules such as gravity, friction, and momentum. This is why a virtual robotic arm cannot pass through a solid steel table, and why a virtual box falls when it is dropped. Without a physics engine, the simulation would be just a picture, not a true testing ground for engineers.
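To show what the “rule book” does at its most basic, here is a toy one-dimensional physics step in Python: gravity integrated with semi-implicit Euler, plus a contact rule that stops a dropped box at the table surface. Real physics engines handle full 3D rigid bodies, friction, and rotation; the time step, restitution, and heights here are illustrative assumptions.

```python
def drop_box(height_m: float, table_top_m: float = 0.0, contact: bool = True,
             dt: float = 0.001, duration_s: float = 2.0, g: float = 9.81,
             restitution: float = 0.0):
    """Simulate the vertical motion of a box released at rest from height_m.
    Returns (final_height_m, final_velocity_m_s)."""
    z, vz = height_m, 0.0
    for _ in range(round(duration_s / dt)):
        vz -= g * dt          # gravity updates velocity first (semi-implicit Euler)
        z += vz * dt          # then the new velocity updates position
        if contact and z < table_top_m:
            z = table_top_m           # contact rule: no passing through the table
            vz = -vz * restitution    # bounce if restitution > 0, otherwise stop
    return z, vz

print(drop_box(1.0))                 # comes to rest on the table
print(drop_box(1.0, contact=False))  # without the contact rule, it falls through
```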

Combining an accurate virtual model of the robot with the physics engine (the rule book) makes the simulation highly reliable. Engineers can be much more confident that a test that succeeds in the virtual environment will also succeed in the real world, although the “reality gap” means some physical testing is still required. This confidence helps companies solve problems in settings ranging from automotive manufacturing on Earth to robotic exploration on Mars.

Benefit 1: Test Without Breaking the Bank

A robot arm crashing into equipment because it swung too wide costs nothing in a simulation and can be fixed with a few clicks. On a manufacturing floor, the same mistake can mean a $100,000 repair bill. Being able to make mistakes like this, to crash, learn, and reset without penalty, is the most significant benefit of robot simulation, and it spares companies the huge expense of catastrophic errors.

Unplanned downtime is just as damaging. If a key robot on an assembly line stops working because of an unanticipated failure, the entire line stops and loses thousands of dollars per hour. With virtual commissioning, companies can develop and validate robot programs before the robot ever reaches the factory floor, avoiding the time and money that a single failure would otherwise cost.

Finally, virtual testing reduces waste. Physical test runs of processes such as welding and painting consume material on every run, and the paint, wire, or parts used in a failed run are usually discarded. Virtual tests consume no physical materials and therefore produce no material waste, which makes them a significant and fast way to reduce robot development costs.

Benefit 2: Work at Light Speed by Testing Thousands of Scenarios Overnight

Time passes at a different rate in a digital twin than it does for a physical robot. A physical robot is bound by real time, whereas a simulation can run much faster than real time when enough computing power is available. Much like a video played back at high speed, a task that takes a real robot ten minutes can often be simulated in a few seconds.

Because simulations run much faster than real time, engineers can try thousands of ways of completing a task overnight. They can then pick the most efficient method and shave seconds off each cycle; over a year of continuous operation, those seconds add up to days of productive time.
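The arithmetic behind “seconds per cycle become days per year” is simple. The sketch below assumes continuous 24/7 operation over a 365-day year and counts cycles at the original cycle time; the 60-second cycle and 2-second saving are hypothetical inputs, not figures from a real line.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # continuous operation, no downtime assumed

def annual_time_saved_days(cycle_time_s: float, saved_per_cycle_s: float) -> float:
    """Robot time freed in one year: cycles per year x seconds saved per cycle."""
    cycles_per_year = SECONDS_PER_YEAR / cycle_time_s
    return cycles_per_year * saved_per_cycle_s / 86400  # 86,400 s per day

# Hypothetical example: trimming 2 s from a 60 s cycle.
print(f"{annual_time_saved_days(60, 2):.1f} days per year")  # 12.2 days per year
```

Here 525,600 cycles per year × 2 s is about 1.05 million seconds, or roughly 12 days of robot time.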

The speed of simulation gives engineers an enormous advantage. Using a technique called offline programming, they can develop the robot’s entire sequence of actions, test it, and refine it before the physical robot is delivered. When the robot is finally installed, the optimized program is ready to load. This saves the weeks that programming on the shop floor would otherwise take, and the robot starts productive work that much sooner.

Benefit 3: Practice for Disaster in a Perfectly Safe World

If a robotic arm in a car plant swings too hard, or a programming error makes a warehouse robot collide with a shelving unit, those mistakes cost money in the real world and, in some cases, lives. Engineers could never physically test everything that might go wrong when a robot crashes. In a digital twin, however, where failures have no real consequences, engineers can (and should) test a wide range of failure scenarios.

The digital twin runs on a physics simulation, much like a video game. In a game, collision detection keeps a player character from walking through solid walls, and robot simulations rely on the same principle: the digital twin tracks exactly where every 3D object is and how big it is, including its own arm and a nearby conveyor belt. Engineers can therefore simulate a crash or an emergency stop 1,000 times and analyze every outcome without damaging the actual machinery.
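A minimal sketch of the collision-detection idea, assuming every object is approximated by an axis-aligned bounding box (AABB) and the tool is checked at sampled points along its path. Physics engines use more precise shapes and continuous checks; the conveyor dimensions, tool size, and paths below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box given by its min and max corners (metres)."""
    lo: Vec3
    hi: Vec3

    def overlaps(self, other: "Box") -> bool:
        # Two AABBs intersect only if their intervals overlap on every axis.
        return all(a_lo <= b_hi and b_lo <= a_hi
                   for a_lo, a_hi, b_lo, b_hi in zip(self.lo, self.hi, other.lo, other.hi))

def linear_path(start: Vec3, end: Vec3, steps: int) -> List[Vec3]:
    """steps + 1 evenly spaced points from start to end."""
    return [tuple(s + (e - s) * i / steps for s, e in zip(start, end))
            for i in range(steps + 1)]

def first_collision(path: Sequence[Vec3], tool_size: Vec3,
                    obstacles: Sequence[Box]) -> Optional[int]:
    """Index of the first path point where the tool's box hits an obstacle, or None."""
    half = [s / 2 for s in tool_size]
    for i, p in enumerate(path):
        tool = Box(tuple(c - h for c, h in zip(p, half)),
                   tuple(c + h for c, h in zip(p, half)))
        if any(tool.overlaps(ob) for ob in obstacles):
            return i
    return None

conveyor = Box((0.9, -0.5, 0.0), (1.5, 0.5, 0.8))   # hypothetical obstacle
tool = (0.1, 0.1, 0.1)
low_swing = linear_path((0.0, 0.0, 0.5), (2.0, 0.0, 0.5), 20)
high_swing = linear_path((0.0, 0.0, 1.0), (2.0, 0.0, 1.0), 20)
print("low swing collides at point", first_collision(low_swing, tool, [conveyor]))
print("high swing collides at point", first_collision(high_swing, tool, [conveyor]))
```

Because the check only runs at sampled points, the spacing between samples must be smaller than the thinnest obstacle; otherwise a fast swing can pass through an obstacle between two samples.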

In fact, this kind of rigorous safety testing is what allows robots to leave their “cages” and work alongside people. Safety is the central design requirement for collaborative robots (“cobots”): by demonstrating that a robot will automatically and safely come to a halt when a person comes too close, manufacturers can create workplaces where humans and machines collaborate on complex tasks.
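As a simplified illustration of “stop if a person comes too close,” the sketch below computes a protective separation distance from the robot’s speed: the distance the robot covers while reacting and braking, plus the distance a person walking toward it covers in the same time, plus a margin. This is a deliberately simplified model, not the speed-and-separation-monitoring formula from ISO/TS 15066, and every default value (walking speed, reaction time, braking deceleration, margin) is an assumption.

```python
def protective_distance_m(robot_speed: float, human_speed: float = 1.6,
                          reaction_s: float = 0.1, brake_decel: float = 2.0,
                          margin_m: float = 0.1) -> float:
    """Separation (m) at or below which a stop must be triggered (simplified model)."""
    stop_time_s = reaction_s + robot_speed / brake_decel
    # Robot: full speed during the reaction time, then constant braking.
    robot_travel = robot_speed * reaction_s + robot_speed ** 2 / (2 * brake_decel)
    # Person: assumed to keep walking toward the robot until it has stopped.
    human_travel = human_speed * stop_time_s
    return robot_travel + human_travel + margin_m

def must_stop(separation_m: float, robot_speed: float) -> bool:
    return separation_m <= protective_distance_m(robot_speed)

# Check a few separations for a robot moving at 1.0 m/s.
for separation in (3.0, 2.0, 1.5, 1.4, 1.0):
    print(f"{separation:.1f} m ->", "STOP" if must_stop(separation, 1.0) else "continue")
```

In a digital twin, this check would run every control cycle against simulated people walking along many different paths, so the stop behavior can be verified thousands of times before anyone stands next to the real robot.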

Simulation Tools: Digital Tools That Make Robot Testing Safer and Faster

Simulation tools help engineering teams design, validate, and refine robotic behaviors in a virtual environment before deploying them on hardware. They make it possible to evaluate a robot’s motion, perception, and decision-making in a controlled environment, which reduces risk and accelerates development. Combined with virtual robot testing, simulation tools make it easier to identify issues early and to verify fixes efficiently.

At a minimum, a simulation tool should replicate the robotic system, its workspace, and the tasks it performs. For an industrial robot, that typically means the entire robotic cell, including conveyors, fixtures, parts, and safety zones. For a mobile robot, it means maps, obstacles, traffic patterns, and varying lighting. Replicating the environment is what makes virtual robot testing repeatable; if the environment cannot be reproduced, test results are not a reliable measure of progress.

A major benefit of simulation tools is that robotic systems can safely be made to fail on purpose. You can run the robot at high speed, induce sensor failures, add unexpected obstacles, or simulate misaligned parts, all without risking collisions or damage to equipment. In virtual robot testing, these edge-case simulations become test suites that run whenever the code changes. As a result, teams show not only that a robotic system works once, but that it keeps working as the software evolves.
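Here is a minimal sketch of “failure on purpose” turned into a repeatable test, assuming a hypothetical distance sensor and a toy safety monitor: the fault injector makes the sensor lose its signal from a chosen step onward, and the suite checks that the monitor stops the robot within a fixed number of missing readings. In a real suite, the fault would be injected into the simulated sensor while the real monitoring code runs unchanged.

```python
from typing import Callable, Optional

Sensor = Callable[[int], Optional[float]]

def make_sensor(fail_at_step: Optional[int]) -> Sensor:
    """Hypothetical sensor reading 5.0 m; from fail_at_step on it returns
    None (signal lost). fail_at_step=None means no fault is injected."""
    def read(step: int) -> Optional[float]:
        if fail_at_step is not None and step >= fail_at_step:
            return None
        return 5.0
    return read

def stop_step(sensor: Sensor, timeout_steps: int = 3,
              total_steps: int = 50) -> Optional[int]:
    """Toy safety monitor: stop after timeout_steps consecutive missing readings.
    Returns the step at which the robot stopped, or None if it never stopped."""
    missing = 0
    for step in range(total_steps):
        missing = missing + 1 if sensor(step) is None else 0
        if missing >= timeout_steps:
            return step
    return None

# Fault-injection suite: the robot must stop within 3 steps of any signal loss.
for onset in (0, 10, 25, 40):
    stopped = stop_step(make_sensor(onset))
    ok = stopped is not None and stopped - onset < 3
    print(f"signal lost at step {onset}: stopped at {stopped} ->", "PASS" if ok else "FAIL")
print("no fault injected: stopped at", stop_step(make_sensor(None)))
```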

Beyond speed, simulation tools decouple testing from the physical robot: teams can iterate without being tied to the hardware, without causing production downtime, and without physically moving the robot.

Simulation tools let you try different paths, grasp points, or navigation parameters and compare the results in minutes. These virtual experiments can then become automated regression checks, helping prevent “it used to work” issues when the robot goes into commissioning.

A good simulation tool should include physics and kinematics, collision detection, sensor simulation (camera, LiDAR, etc.), and logging that shows why a particular run succeeded or failed. Integrating the tool into your overall workflow is equally important: it should provide APIs and scripting capabilities, or integrate well with common robotics frameworks, so it can communicate with your real-world software stack. With such a pipeline in place, virtual robot testing can run unattended overnight, generate metrics (e.g., success rate, cycle time, near-collision counts), and highlight areas of risk.

To adopt simulation tools, start by choosing one area to improve, define what counts as passing or failing for that workflow, and build scenarios that cover the range of conditions you expect in the real world. Once simulation becomes a standard part of your validation process, it serves as a safety net that makes robot development faster and safer while reducing uncertainty.

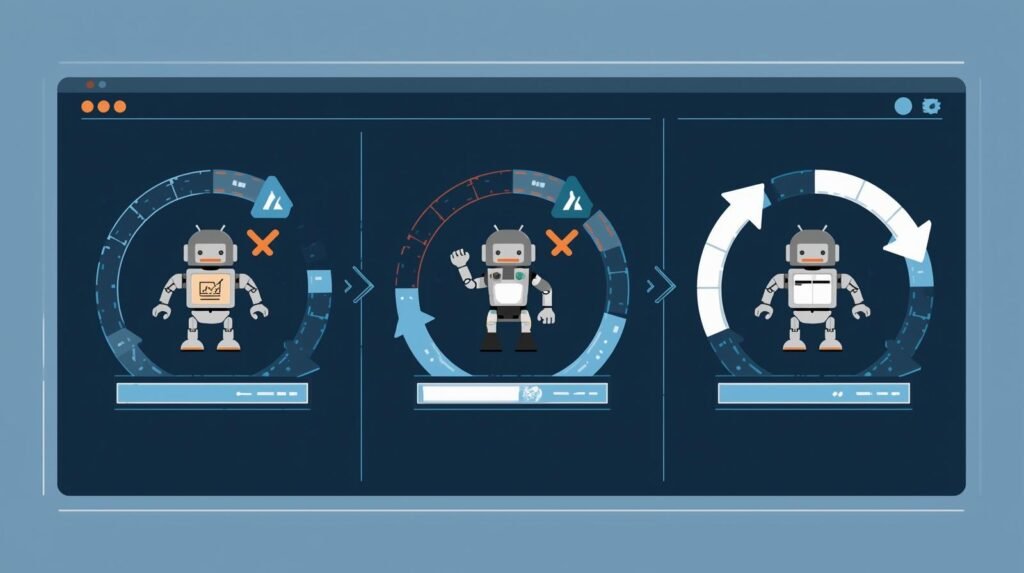

Automated Testing: Automated Tests Validate Robot Behavior Without Manual Effort

Automated testing lets robotics teams check repeatedly that their robots perform as intended, without re-checking the robots’ actions by hand each time. With an automated testing tool, teams execute predefined test cases, record the results, and flag a regression whenever a previously passing test starts to fail. Combined with virtual robot testing, it has become a cost-effective way to show that navigation, manipulation, and safety logic will work under many conditions (e.g., different lighting).

In robotics, a small change to the control system can have unintended consequences. For example, a controller adjustment may allow higher speeds but also cause more overshoot than before. Similarly, a change to the perception system may work well in daylight but fail in shadows. Automated testing catches these problems by turning each real requirement into an automated check.

Such checks include, but are not limited to, “no collision,” “task completed within the allotted time,” and “robot stopped within a safe distance.” With virtual robot testing, these checks run in a simulated environment where conditions are repeatable and failures are cheap and safe.

A good way to structure automated testing is to define testing levels. The first level is unit testing, which tests individual components of the code, such as math utilities, planners, and state machines. Once the components work individually, integration testing checks how they work together, for example how sensor data affects the robot’s planning system.
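At the unit-test level, a check can be as small as one math utility. The sketch below uses Python’s standard `unittest` module to test a hypothetical `wrap_angle` helper of the kind planners and controllers often rely on; integration and end-to-end tests follow the same pattern but exercise larger parts of the stack.

```python
import math
import unittest

def wrap_angle(theta: float) -> float:
    """Map an angle in radians into the interval [-pi, pi)."""
    return (theta + math.pi) % (2.0 * math.pi) - math.pi

class WrapAngleTest(unittest.TestCase):
    """Unit tests: each one checks a single, well-defined behavior."""

    def test_angle_in_range_is_unchanged(self):
        self.assertAlmostEqual(wrap_angle(0.5), 0.5)

    def test_full_turns_are_removed(self):
        self.assertAlmostEqual(wrap_angle(0.5 + 4.0 * math.pi), 0.5)

    def test_negative_angle_wraps_to_positive(self):
        self.assertAlmostEqual(wrap_angle(-1.5 * math.pi), 0.5 * math.pi)

    def test_upper_bound_maps_to_lower_bound(self):
        self.assertAlmostEqual(wrap_angle(math.pi), -math.pi)

if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(WrapAngleTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```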

End-to-end testing of an entire mission (such as pick, place, inspect, and/or deliver) shows how well the robot performs overall and collects metrics such as success rate, total mission time, and the number of “near miss” events during the mission. Virtual robot testing is a cost-effective way to run end-to-end test plans that would be difficult to execute on physical hardware.

Perhaps the most significant advantage of combining virtual robot testing with automated testing is consistency. Human testers get tired and may skip steps, and different people may interpret the same outcome in different ways; automated tests perform the same steps every time and measure the outcomes the same way.

As a result, confidence in the quality of released software and hardware increases significantly. Beyond consistency, virtual robot testing lets developers test unsafe or rare scenarios, for example loss of sensor input, a suddenly appearing obstacle, a slippery surface, or incorrect part orientation, without risking damage to equipment.

In order to successfully implement automated testing within your development process, you should define measurable acceptance criteria prior to building a library of tests. These acceptance criteria should represent typical usage scenarios.

Your test library should include logging and replay capabilities to enable quick failure diagnosis. Finally, integrate automated testing into your continuous integration process so that every test runs whenever a developer commits changes. Together, virtual robot testing and automated testing create a positive feedback loop: each bug found becomes a new test in the library, and each successive version of your product becomes more reliable with less human intervention.

Virtual Testing: Identify Failures Early Using Virtual Robot Tests

Virtual testing helps robotics teams identify failures early by simulating how their robot behaves before any real-world demonstration. It lets you exercise motion, sensing, and decision-making without damaging hardware, causing production downtime, or injuring anyone. Structuring virtual testing as virtual robot testing provides a systematic way to verify robot performance across multiple scenarios.

One major benefit of virtual testing is that problems surface earlier than they would with traditional methods. Rather than discovering a collision on commissioning day, you can identify clearance issues, unreachable poses, or unstable trajectories in simulation.

For mobile robots, virtual testing can reveal navigation failures such as poor obstacle avoidance, localization drift, or brittle recovery logic. Virtual robot testing suites let teams verify that new features neither reintroduce old bugs nor introduce new ones.

Because robotics is so variable, virtual testing is most valuable when “stress” conditions are represented accurately. You can include sensor noise, lighting changes, reflective surfaces, payload shifts, slippery floors, random obstacles, and more in virtual robot testing. Together these conditions form a catalog of scenarios representative of what your robot will encounter outside the lab. Rather than hoping your robot will perform well at the edge of its capabilities, virtual testing demonstrates those capabilities and gives you evidence.
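One way to build such a catalog is to sample stress conditions at random from plausible ranges, a technique often called domain randomization. In this sketch the `Scenario` fields and their ranges are hypothetical placeholders; a fixed seed makes the catalog reproducible, so the same scenarios can be rerun after every change.

```python
import random
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Scenario:
    """One set of stress conditions for a simulated run (hypothetical fields)."""
    sensor_noise_m: float   # standard deviation of range noise
    light_level: float      # 0 = dark, 1 = full brightness
    floor_friction: float   # low values model slippery floors
    payload_kg: float
    obstacle_count: int

def sample_scenarios(n: int, seed: int = 0) -> List[Scenario]:
    """Draw n scenarios; the same seed always yields the same catalog."""
    rng = random.Random(seed)
    return [Scenario(sensor_noise_m=rng.uniform(0.0, 0.05),
                     light_level=rng.uniform(0.1, 1.0),
                     floor_friction=rng.uniform(0.2, 0.9),
                     payload_kg=rng.uniform(0.0, 10.0),
                     obstacle_count=rng.randint(0, 5))
            for _ in range(n)]

catalog = sample_scenarios(200, seed=7)
slippery = sum(s.floor_friction < 0.3 for s in catalog)
print(f"{len(catalog)} scenarios, {slippery} with slippery floors")
```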

Speed and coverage are other big positives. Real robots are limited by availability, safety considerations, and setup time. Simulated robots, by contrast, can run much faster than real time, and many simulations can run in parallel across machines, so hundreds or thousands of runs fit in a short time window. With virtual robot testing, you can track metrics such as success rate, completion time, number of collisions, minimum distance to obstacles, and time to recover from failures. As a result, virtual robot testing becomes a decision-making tool for your company, not just a demo.

To conduct virtual testing effectively, define clear pass/fail criteria tied to real-world requirements, including safety margins, cycle times, path smoothness, and docking accuracy. Automate the simulations and record every result so that trends can be tracked over time. When a failure occurs, use the virtual robot testing system to replay the event and generate logs that quickly reveal the root cause. Followed consistently, this process turns virtual testing into a reliable safety net that minimizes late-stage surprises and builds confidence in products before deployment.
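Replay is straightforward when every source of randomness in a run comes from one seeded generator: storing the seed is enough to reproduce a failure exactly. The toy episode below (a robot approaching a wall with a noisy range sensor) and its 0.5 m failure threshold are illustrative assumptions.

```python
import random
from typing import List, Tuple

Trace = List[Tuple[int, float, float]]  # (step, true distance, sensor reading)

def run_episode(seed: int, steps: int = 50) -> Trace:
    """Toy run: the robot starts 5 m from a wall, advances 0.1 m per step, and
    holds position whenever its noisy reading says it is within 1 m."""
    rng = random.Random(seed)  # the only source of randomness in the run
    distance = 5.0
    trace: Trace = []
    for step in range(steps):
        reading = distance + rng.gauss(0.0, 0.3)
        if reading > 1.0:
            distance -= 0.1
        trace.append((step, round(distance, 3), round(reading, 3)))
    return trace

def failed(trace: Trace, min_safe_m: float = 0.5) -> bool:
    return min(d for _, d, _ in trace) < min_safe_m

# Run a batch, keep only the seeds of failing runs, then replay one for diagnosis.
failing_seeds = [s for s in range(300) if failed(run_episode(s))]
print(f"{len(failing_seeds)} failing seeds out of 300")
if failing_seeds:
    replay = run_episode(failing_seeds[0])
    assert replay == run_episode(failing_seeds[0])  # identical on every replay
    print("closest approach (step, distance, reading):", min(replay, key=lambda row: row[1]))
```

The logged readings show exactly which noisy measurement let the robot creep too close, which is the root-cause information the replay is meant to surface.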

Software Robotics: Software-Driven Systems That Control and Simulate Robots

Software robotics refers to the software that plans how robots will act and verifies that they act as intended, typically before a physical robot moves. Today, software robotics combines motion planning, perception, human and equipment safety, and device coordination into a single development stack that can be tested, optimized, and released as needed. Combined with virtual robot testing, this lets developers build robots more quickly, more safely, and at greater scale than was previously possible.

At the control level, software robotics translates user-defined goals into actions, such as moving to a position, following a path, or grasping an item. It also enforces system constraints such as joint limits, maximum acceleration, maximum load, and safety zones. Software robotics also defines the interfaces to sensors and actuators (e.g., cameras, LiDAR, grippers, motor controllers), enabling the robot to perceive its environment and respond accordingly. Because real-world safety depends on this software, reliability is critical, and even a small software change can significantly affect it.
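As an example of this kind of constraint handling, the sketch below limits a single joint’s position command for one control period so that it respects acceleration, velocity, and position limits. It is a simplification with hypothetical limit values: real controllers plan deceleration ahead of a position limit instead of clamping at it, since clamping can demand an abrupt velocity change.

```python
from typing import Tuple

def limit_command(q_cmd: float, q_prev: float, v_prev: float, dt: float,
                  q_min: float, q_max: float, v_max: float,
                  a_max: float) -> Tuple[float, float]:
    """Return a (position, velocity) pair for one control period of length dt
    that moves toward q_cmd without exceeding the given limits."""
    v = (q_cmd - q_prev) / dt                                  # velocity the raw command implies
    v = max(v_prev - a_max * dt, min(v_prev + a_max * dt, v))  # acceleration limit
    v = max(-v_max, min(v_max, v))                             # velocity limit
    q = max(q_min, min(q_max, q_prev + v * dt))                # position (joint) limit
    return q, (q - q_prev) / dt

# A large jump requested from rest is spread out instead of executed at once.
q, v = 0.0, 0.0
for _ in range(5):
    q, v = limit_command(1.0, q, v, dt=0.01, q_min=-1.5, q_max=1.5,
                         v_max=1.0, a_max=10.0)
print(f"after 5 ticks: q = {q:.4f} rad, v = {v:.2f} rad/s")
```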

Simulation is another key focus of software robotics. Developers use digital models of their environments to check that new behaviors work before they reach the real world, and to test how their robot systems perform under different conditions, which is especially valuable when lab time is limited or failure is costly.

Using virtual robot testing, the same behaviors may be executed in multiple, repeated scenarios to determine if the desired outcome occurred; e.g., did not collide, maintained a stable grip, accurately docked, or stopped safely. By converting the requirements into automated tests, virtual robot testing allows development teams to maintain quality as their codebases grow.

Practical workflows often follow this sequence: you develop/validate your behaviors using simulation tools; then you create testing suites (both standard and extreme) for your virtual robots; finally, you validate on your actual robotic hardware. Software robotics makes failure identification easier by including logging and/or telemetry capabilities that enable direct comparison of trace data from simulated and actual environments; you can then adjust parameter values and run additional test suites until performance is as expected.
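A minimal sketch of that comparison, assuming the simulated and real traces have already been resampled to the same timestamps (for example, one joint position logged every 10 ms). The tolerances and sample values are hypothetical; in practice they would come from the application’s accuracy requirements and real logs.

```python
import math
from typing import Sequence, Tuple

def trace_error(sim: Sequence[float], real: Sequence[float]) -> Tuple[float, float]:
    """Root-mean-square error and worst-case deviation between two aligned traces."""
    if len(sim) != len(real) or not sim:
        raise ValueError("traces must be non-empty and sampled at the same timestamps")
    diffs = [s - r for s, r in zip(sim, real)]
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return rmse, max(abs(d) for d in diffs)

def needs_tuning(sim: Sequence[float], real: Sequence[float],
                 rmse_tol: float = 0.02, max_tol: float = 0.05) -> bool:
    """Flag the simulation for parameter adjustment if it drifts too far from reality."""
    rmse, worst = trace_error(sim, real)
    return rmse > rmse_tol or worst > max_tol

sim_trace = [0.00, 0.10, 0.20, 0.30, 0.40]    # illustrative values only
real_trace = [0.00, 0.12, 0.19, 0.33, 0.46]
rmse, worst = trace_error(sim_trace, real_trace)
print(f"RMSE = {rmse:.4f}, max deviation = {worst:.2f}, "
      f"retune: {needs_tuning(sim_trace, real_trace)}")
```

Tracking both numbers is a deliberate choice: the RMSE captures overall drift, while the maximum deviation catches a single large mismatch that an average would hide.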

Software robotics also has advantages outside of engineering: you can use the platform for training (operators can practice procedures in a completely safe environment); you can collaborate (teams can utilize the same simulated setup and reproduce any issues consistently); and you can improve continuously (every bug can be turned into a new test case). Ultimately, the combination of software robotics and virtual robot testing will enable an iterative development cycle that reduces risk, accelerates verification of all changes, and improves deployment predictability.

As more companies adopt robotics, software will increasingly be the key differentiator: the “smarter” the software, the more capable and reliable the robotic system.

Virtual Robotics: Explore Robotics Concepts Inside Digital Environments

Virtual Robotics is the practice of developing and learning robot behavior, implementing algorithms, and building overall systems within a virtual environment rather than on physical hardware. Through Virtual Robotics, users can develop an understanding of fundamental concepts (kinematics, control-loop implementation, sensor data collection/interpretation, and autonomous operation) in a safe, easily reproducible environment.

One of the most significant advantages of using Virtual Robotics is the ability to rapidly test and validate changes to your robotic system(s). Users can quickly modify their robots’ dimensions, add sensors, change the terrain on which they operate, and adjust control parameters without waiting hours or even days to see the results.

Because it makes testing and validation so easy, Virtual Robotics is highly recommended for educational settings and for early-stage R&D and prototyping, where it lets users validate ideas that would be high-risk or cost-prohibitive to try on physical systems. As users move from exploring ideas to verifying that they work as intended, Virtual Robot Testing can formalize those explorations with clear pass/fail criteria.

Virtual Robot Testing focuses on validating robot behaviors across multiple scenarios. Typical examples are avoiding obstacles, completing grasps, following smooth paths, docking accurately, and stopping within safe distances. Because each scenario can be repeated under identical conditions, teams can also isolate root causes and compare different versions of the same codebase. This repeatability is what has driven the adoption of Virtual Robot Testing for regression suites, which verify that a recent update did not break previously working functionality.
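The following Python sketch makes the idea of a regression suite concrete. It is built on a deliberately simplified, hypothetical braking model. The names `Scenario`, `simulate_stop`, and `find_regressions`, along with the deceleration values, are illustrative assumptions and do not belong to any real simulator. Each scenario carries a fixed random seed, so it runs under identical conditions every time. The suite then flags any scenario that passed on the previous version but fails on the new one.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Scenario:
    name: str
    seed: int                 # fixed seed => identical conditions on every run
    start_speed: float        # m/s when the stop command is issued
    max_stop_distance: float  # m; pass/fail threshold

def simulate_stop(scenario: Scenario, decel: float, dt: float = 0.01) -> float:
    """Hypothetical 1-D braking model: distance travelled before the robot stops."""
    rng = random.Random(scenario.seed)
    speed, distance = scenario.start_speed, 0.0
    while speed > 0:
        # Simulated actuator noise: +/-5% on each step's deceleration.
        noisy_decel = decel * (1 + rng.uniform(-0.05, 0.05))
        distance += speed * dt
        speed -= noisy_decel * dt
    return distance

def run_suite(scenarios, decel: float) -> dict:
    """Return {scenario name: passed?} for one controller version."""
    return {s.name: simulate_stop(s, decel) <= s.max_stop_distance for s in scenarios}

def find_regressions(baseline: dict, candidate: dict) -> list:
    """Scenarios that passed on the baseline but fail on the candidate."""
    return sorted(name for name, ok in baseline.items() if ok and not candidate[name])

SUITE = [
    Scenario("slow_approach", seed=1, start_speed=1.0, max_stop_distance=0.30),
    Scenario("fast_approach", seed=2, start_speed=2.0, max_stop_distance=0.75),
]

if __name__ == "__main__":
    baseline = run_suite(SUITE, decel=4.0)   # previous release (hypothetical)
    candidate = run_suite(SUITE, decel=2.0)  # new build with softer braking (hypothetical)
    print("regressions:", find_regressions(baseline, candidate))
```

Running it prints `regressions: ['fast_approach']`. The softer braking in the hypothetical new build still stops in time at low speed, but it overshoots the 0.75 m limit at 2 m/s. In a real setup, `simulate_stop` would be replaced by a call into the physics simulator, while the seeding and baseline comparison would stay the same.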

Virtual robotics can also cover edge cases that are difficult or unsafe to reproduce with physical hardware. Examples include sensor noise, changing lighting, slippery floors, unexpected obstacles, and communication delays. With virtual robot testing, you can run hundreds of combinations of these conditions. For each one, you can measure the success rate, the task completion time, the number of collisions, and the time needed to recover from an error.
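Combinatorial edge-case testing of this kind can be sketched as a parameter sweep. The Python below assumes a toy stop-before-obstacle model. The `trial` function, its default speed and deceleration, and the chosen noise, friction, and latency levels are all hypothetical. The sweep runs every combination of the three edge-case dimensions many times. For each case it records the success rate, the collision count, and the mean time to stop.

```python
import itertools
import random
import statistics

# Hypothetical edge-case levels; illustrative values, not calibrated data.
SENSOR_NOISE = [0.0, 0.05, 0.15]  # std dev of range error, m
FRICTION = [1.0, 0.6, 0.3]        # fraction of nominal braking (1.0 = dry floor)
LATENCY = [0.0, 0.1, 0.3]         # communication delay, s

def trial(noise, friction, latency, rng, speed=1.5, start_gap=3.0,
          brake_at=1.0, decel=3.0):
    """Toy trial: brake when the sensor reads brake_at m to the obstacle.
    Returns (collided, seconds until the robot stops)."""
    error = rng.gauss(0.0, noise)                  # sensor reads true distance + error
    true_trigger = brake_at - error                # real gap when the reading hits brake_at
    gap_at_brake = true_trigger - speed * latency  # robot keeps moving during the delay
    effective_decel = decel * friction
    stop_distance = speed ** 2 / (2 * effective_decel)
    collided = stop_distance > gap_at_brake
    elapsed = (start_gap - true_trigger) / speed + latency + speed / effective_decel
    return collided, elapsed

def sweep(trials_per_case=200, seed=0):
    results = {}
    for case in itertools.product(SENSOR_NOISE, FRICTION, LATENCY):
        rng = random.Random(f"{seed}-{case}")  # per-case seed => reproducible reruns
        outcomes = [trial(*case, rng) for _ in range(trials_per_case)]
        collisions = sum(collided for collided, _ in outcomes)
        times = [t for collided, t in outcomes if not collided]
        results[case] = {
            "success_rate": 1 - collisions / trials_per_case,
            "collisions": collisions,
            "mean_time_s": statistics.mean(times) if times else None,
        }
    return results

if __name__ == "__main__":
    results = sweep()
    worst = sorted(results.items(), key=lambda kv: kv[1]["success_rate"])[:3]
    for (noise, friction, latency), metrics in worst:
        print(f"noise={noise} friction={friction} latency={latency}: {metrics}")
```

Because each case gets its own seed, a rerun reproduces the same numbers. Even in this toy model, the interesting failures come from combinations. With a noiseless sensor, the robot handles 60% friction on its own and a 0.3 s delay on its own, but the two together cause a collision in every trial.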

To get value from virtual robotics, first decide what you want to learn or develop (for example, “Navigate through a crowded hallway” or “Pick parts out of a bin”). Next, create a small set of scenarios that reflect realistic operating conditions. Then use virtual robot testing to run those scenarios automatically, collect data on the results, and replay the runs where things went wrong. Over time, virtual robotics becomes a foundation for training, design iteration, and successful deployment, because virtual robot testing lets you keep measuring performance as systems grow more complex.
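The Python sketch below gives a rough illustration of that workflow. It takes the goal “Navigate through a crowded hallway” and defines three small scenarios. It then runs them automatically, records a per-step trace, and replays any failure by rerunning its seed. The hallway model is a hypothetical simplification: the robot moves forward at constant speed, random sideways pushes stand in for crowd contact, and a proportional controller steers back to the center line. Every name and number in the code is also hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

@dataclass
class Result:
    scenario: str
    passed: bool = True
    trace: List[Tuple[float, float, float]] = field(default_factory=list)  # (t s, x m, y m)
    failed_at: Optional[int] = None  # trace index where the robot first hit a wall

def run_hallway(name: str, seed: int, disturbance: float, gain: float = 0.5,
                width: float = 1.2, length: float = 10.0, speed: float = 1.0,
                dt: float = 0.1) -> Result:
    """Hypothetical hallway model: drive forward while random sideways pushes
    are corrected by a proportional controller."""
    rng = random.Random(seed)  # the seed fully determines the run
    x = y = t = 0.0
    result = Result(name)
    while x < length:
        y += rng.uniform(-disturbance, disturbance)  # sideways push
        y -= gain * y                                # steer back toward the center line
        x += speed * dt
        t += dt
        result.trace.append((t, x, y))
        if abs(y) > width / 2:                       # touched a wall: scenario fails
            result.passed = False
            result.failed_at = len(result.trace) - 1
            break
    return result

# Goal: "Navigate through a crowded hallway" -> (name, seed, max sideways push in m)
SCENARIOS = [
    ("quiet_hallway", 1, 0.05),
    ("busy_hallway", 2, 0.3),
    ("packed_hallway", 3, 1.5),
]

def run_all() -> List[Result]:
    return [run_hallway(*s) for s in SCENARIOS]

if __name__ == "__main__":
    results = run_all()
    print(f"passed {sum(r.passed for r in results)}/{len(results)} scenarios")
    for r in results:
        if not r.passed:
            # Replay: rerunning the same scenario reproduces the identical trace.
            replay = run_hallway(*next(s for s in SCENARIOS if s[0] == r.scenario))
            assert replay.trace == r.trace
            print(f"{r.scenario}: hit a wall at (t, x, y) = {replay.trace[r.failed_at]}")
```

In this sketch the quiet and busy hallways pass and the packed hallway fails. The seed fully determines each run, so the replayed trace matches the original exactly, and the failing step can be inspected as often as needed.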

From Car Factories to Mars: Where Virtual Testing is Used Today

Many industries already use this technique. Offline robot programming, the ability to fully program robots in a simulated environment, is revolutionizing manufacturing. In the automotive industry, manufacturers simulate, program, and coordinate thousands of robotic arms. They plan every weld and movement for a new car model before a single real car is built.

Virtual testing also affects the packages delivered to your home. E-commerce companies use simulations to plan the most efficient paths for warehouse robots that sort millions of packages at the same time, and the effects are felt throughout the supply chain. Companies test hundreds of thousands of ways to move products through a warehouse’s digital twin.

The most impressive use of this technology is likely in space exploration. When NASA sends a multi-billion-dollar rover to Mars, there are no second chances to correct operational mistakes. NASA engineers use a highly accurate simulation of the Martian surface to test each command before transmitting it across millions of miles. They rehearse the movements the rover needs to navigate difficult terrain, collect soil samples, and perform other tasks safely.

The Catch: Why You Still Need the Real Robot

While these virtual worlds are incredibly powerful, they are not perfect crystal balls. Think of a very detailed cake recipe that specifies everything from how many cups of sugar to add to when to take the cake out of the oven. You might use the same ingredients and follow every step exactly. Even so, an oven that runs a few degrees warmer than the recipe assumes will produce a cake that doesn’t look identical to the one in the picture.

Likewise, small differences between the simulated environment and the complex, often unpredictable real world (e.g., slight variations in friction, motor temperature, or air pressure) can make a real robot behave slightly differently from its digital twin.
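A short worked calculation makes the friction example concrete. Assume a robot whose braking is limited only by floor friction. Its minimum stopping distance is then v²/(2μg). The Python below compares the friction coefficient assumed in a simulation with a hypothetical real floor that is 10% more slippery. Both μ values are illustrative.

```python
G = 9.81  # gravitational acceleration, m/s^2

def friction_limited_stop_distance(speed: float, mu: float) -> float:
    """Minimum braking distance when deceleration is limited by floor friction: v^2 / (2*mu*g)."""
    return speed ** 2 / (2 * mu * G)

sim = friction_limited_stop_distance(2.0, mu=0.50)   # friction assumed in the simulation (hypothetical)
real = friction_limited_stop_distance(2.0, mu=0.45)  # real floor 10% more slippery (hypothetical)
print(f"sim {sim:.3f} m, real {real:.3f} m, +{(real / sim - 1) * 100:.1f}%")
# prints: sim 0.408 m, real 0.453 m, +11.1%
```

Here a 10% error in one parameter produces an 11% longer stop. A safety check with a limit between the two distances would therefore pass in simulation and fail on the real floor.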

Testing in virtual worlds therefore does not aim to replicate the robot’s real-world behavior exactly. Instead, it aims to get the robot’s program close enough to the real system that only minor adjustments to its movements are needed to account for real-world variables.

Engineers can first solve the major issues, such as path planning and safety rules, in the risk-free virtual world. After that, the physical robot is needed only for the final adjustments that account for those minor real-world variables. This turns a long and costly process into a short, relatively inexpensive check-up.

Building Tomorrow’s Robots, Today

Virtual robot testing lets engineers test robots in a simulated environment instead of on the factory production floor. This has two main advantages. First, it reduces the time and cost of developing robots. Second, it gives engineers more room to develop and test new robots and to improve existing ones.

Virtual robot testing lets engineers test, evaluate, and revise robots rapidly. For that reason, it is key to accelerating development, improving efficiency, and raising robot quality.

FAQs

1. What is Virtual Robot Testing?

Virtual Robot Testing involves testing a robot’s control software within a simulated “digital twin” of the robot and its workspace to confirm how the robot will behave before running the code on the physical device.

2. How does a Digital Twin differ from a 3D Model?

A digital twin is more than a visual representation; it includes the robot’s operating environment and the physics (e.g., gravity, friction, collisions), and it uses the exact program the actual robot will run.

3. How Does Virtual Robot Testing Save Time and Money?

It prevents costly damage and downtime, reduces material waste (e.g., from paint and weld trials), enables offline programming, and lets simulations run faster than real time to accelerate iteration.

4. What Types of Issues Can Virtual Testing Catch Early?

Collisions, unreachable positions, dangerous movements, suboptimal paths, cycle-time problems, and failure cases such as an emergency stop or an unexpected obstacle.

5. Will Virtual Robot Testing Completely Replace Real-World Testing?

No. Due to the “reality gap” (slight real-world variations such as friction and temperature), the actual robot will require final validation and fine-tuning.
